Networked applications#

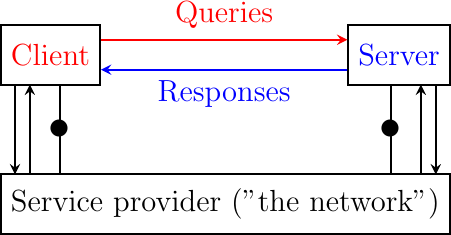

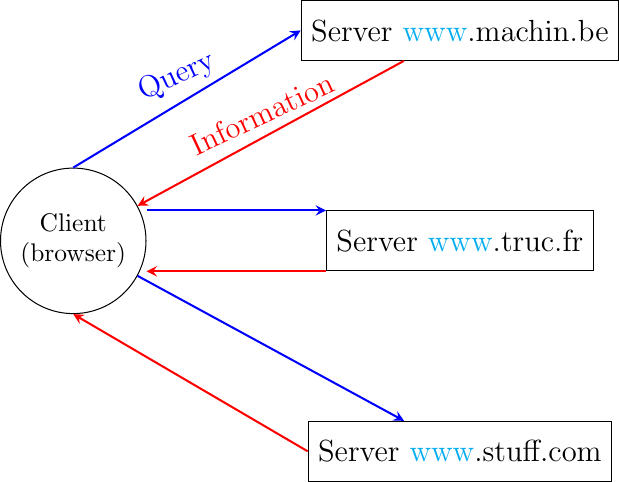

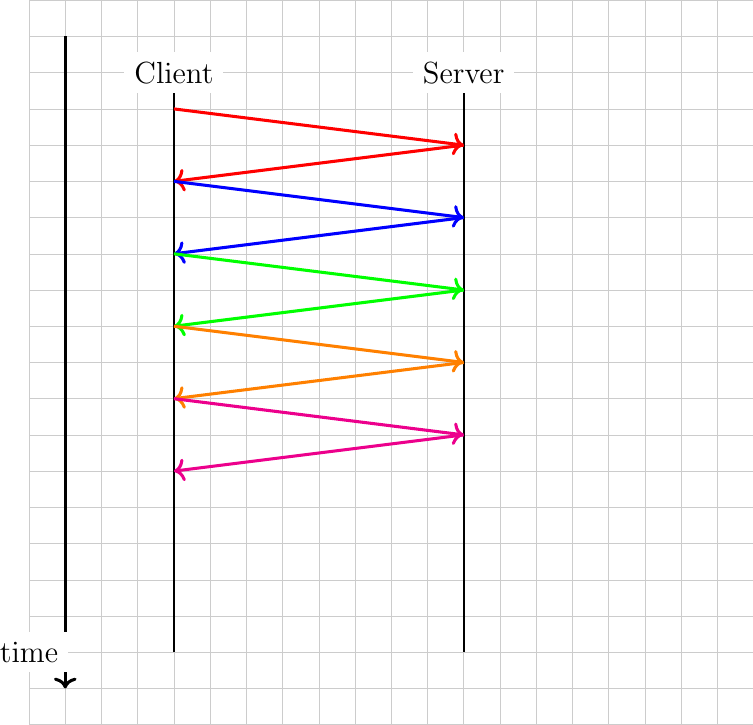

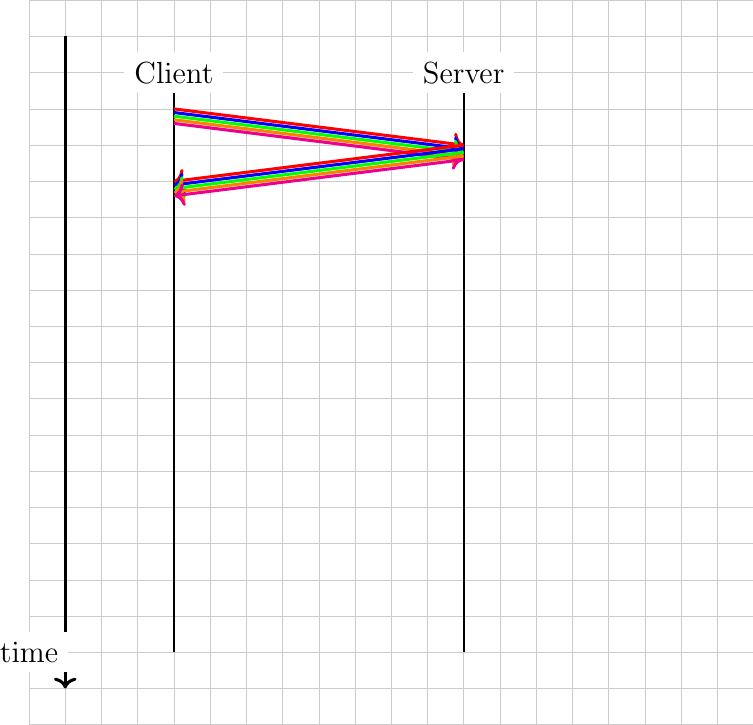

There are two important models used to organize a networked application. The first and oldest model is the client-server model. In this model, a server provides services to clients that exchange information with it. This model is highly asymmetrical: clients send requests and servers perform actions and return responses. It is illustrated in Fig. 20.

Fig. 20 The client-server model

The client-server model was the first model to be used to develop networked applications. This model comes naturally from the mainframes and minicomputers that were the only networked computers used until the 1980s. A minicomputer is a multi-user system that is used by tens or more users at the same time. Each user interacts with the minicomputer by using a terminal. Such a terminal was mainly a screen, a keyboard and a cable directly connected to the minicomputer.

There are various types of servers as well as various types of clients. A web server provides information in response to the queries sent by its clients. A print server prints the documents sent as queries by its clients. An email server forwards the email messages sent as queries towards their recipients, while a music server delivers the music requested by the client. From the viewpoint of the application developer, the client and the server applications directly exchange messages (the horizontal arrows labeled Queries and Responses in the above figure), but in practice these messages are exchanged thanks to the underlying layers (the vertical arrows in the above figure). In this chapter, we focus on these horizontal exchanges of messages.

Networked applications do not exchange random messages. In order to ensure that the server is able to understand the queries sent by a client, and also that the client is able to understand the responses sent by the server, they must both agree on a set of syntactical and semantic rules. These rules define the format of the messages exchanged as well as their ordering. This set of rules is called an application-level protocol.

An application-level protocol is similar to a structured conversation between humans. Assume that Alice wants to know the current time but does not have a watch. If Bob passes close by, the following conversation could take place :

Alice : Hello

Bob : Hello

Alice : What time is it ?

Bob : 11:55

Alice : Thank you

Bob : You’re welcome

Such a conversation succeeds if both Alice and Bob speak the same language. If Alice meets Tchang who only speaks Chinese, she won’t be able to ask him the current time. A conversation between humans can be more complex. For example, assume that Bob is a security guard whose duty is to only allow trusted secret agents to enter a meeting room. If all agents know a secret password, the conversation between Bob and Trudy could be as follows :

Bob : What is the secret password ?

Trudy : 1234

Bob : This is the correct password, you’re welcome

If Alice wants to enter the meeting room but does not know the password, her conversation could be as follows :

Bob : What is the secret password ?

Alice : 3.1415

Bob : This is not the correct password.

Human conversations can be very formal, e.g. when soldiers communicate with their hierarchy, or informal such as when friends discuss. Computers that communicate are more akin to soldiers and require well-defined rules to ensure a successful exchange of information. There are two types of rules that define how information can be exchanged between computers :

Syntactical rules that precisely define the format of the messages that are exchanged. As computers only process bits, the syntactical rules specify how information is encoded as bit strings.

Organization of the information flow. For many applications, the flow of information must be structured and there are precedence relationships between the different types of information. In the time example above, Alice must greet Bob before asking for the current time. Alice would not ask for the current time first and greet Bob afterwards. Such precedence relationships exist in networked applications as well. For example, a server must receive a username and a valid password before accepting more complex commands from its clients.
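The precedence rule in the last example can be sketched as a small state machine. This is only an illustration: the `Session` class, the `USER` and `PASS` command names, and the reply strings are hypothetical and do not come from any real protocol.

```python
class Session:
    """Toy server-side session that enforces: USER, then PASS, then other commands."""

    def __init__(self, accounts):
        self._accounts = accounts      # hypothetical mapping: username -> password
        self._state = "WAIT_USER"
        self._user = None

    def handle(self, command, argument=""):
        if self._state == "WAIT_USER":
            if command == "USER":
                self._user = argument
                self._state = "WAIT_PASS"
                return "OK send password"
            return "ERROR send USER first"
        if self._state == "WAIT_PASS":
            if command == "PASS" and self._accounts.get(self._user) == argument:
                self._state = "READY"
                return "OK logged in"
            # Any failure sends the client back to the start of the exchange.
            self._state = "WAIT_USER"
            return "ERROR login failed"
        # State READY: more complex commands are now accepted.
        return f"OK executing {command}"


session = Session({"alice": "s3cret"})
print(session.handle("LIST"))            # rejected: no username received yet
print(session.handle("USER", "alice"))
print(session.handle("PASS", "s3cret"))
print(session.handle("LIST"))            # accepted after a valid login
```

The server refuses a command that arrives out of order, in the same way that Alice would not ask for the time before greeting Bob.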

Let us first discuss the syntactical rules. We will later explain how the information flow can be organized by analyzing real networked applications.

Application-layer protocols exchange two types of messages. Some protocols such as those used to support electronic mail exchange messages expressed as strings or lines of characters. As the transport layer allows hosts to exchange bytes, they need to agree on a common representation of the characters. The first and simplest method to encode characters is to use the ASCII table. RFC 20 provides the ASCII table that is used by many protocols on the Internet. For example, the table defines the following binary representations :

A : 1000001b

0 : 0110000b

z : 1111010b

@ : 1000000b

space : 0100000b

In addition, the ASCII table also defines several non-printable or control characters. These characters were designed to allow an application to control a printer or a terminal. These control characters include CR and LF, that are used to terminate a line, and the Bell character which causes the terminal to emit a sound.

carriage return (CR) : 0001101b

line feed (LF) : 0001010b

Bell: 0000111b

The ASCII characters are encoded as a seven-bit field, but transmitted as an eight-bit byte whose high order bit is usually set to 0. Bytes are always transmitted starting from the high order or most significant bit.
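The seven-bit codes listed above can be reproduced with a few lines of Python. This is a minimal sketch; the helper name `ascii_bits` is ours and is not part of any library.

```python
def ascii_bits(ch: str) -> str:
    """Return the seven-bit ASCII code of a single character as a bit string."""
    code = ord(ch)
    if code > 0x7F:
        raise ValueError(f"{ch!r} is not an ASCII character")
    return format(code, "07b")


samples = [("A", "A"), ("0", "0"), ("z", "z"), ("@", "@"), ("space", " "),
           ("CR", "\r"), ("LF", "\n"), ("Bell", "\a")]
for name, ch in samples:
    print(f"{name:>5} : {ascii_bits(ch)}b")

# When transmitted, each character occupies one byte whose high order bit is 0.
wire = "Hi\r\n".encode("ascii")
print([format(byte, "08b") for byte in wire])
```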

Most applications exchange strings that are composed of fixed or variable numbers of characters. A common solution to define the character strings that are acceptable is to define them as a grammar using a Backus-Naur Form (BNF) such as the Augmented BNF defined in RFC 5234. A BNF is a set of production rules that generate all valid character strings. For example, consider a networked application that uses two commands, where the user can supply a username and a password. The BNF for this application could be defined as shown in Fig. 21.

Fig. 21 A simple BNF specification#

The example above defines several terminals and two commands : usercommand and passwordcommand. The ALPHA terminal contains all letters in upper and lower case. In the ALPHA rule, %x41 corresponds to ASCII character code 41 in hexadecimal, i.e. capital A. The CR and LF terminals correspond to the carriage return and linefeed control characters. The CRLF rule concatenates these two terminals to match the standard end of line termination. The DIGIT terminal contains all digits. The SP terminal corresponds to the white space character. The usercommand is composed of two strings separated by white space. In the ABNF rules that define the messages used by Internet applications, literal strings placed between quotation marks are case-insensitive. The rule “user” thus corresponds to all possible cases of the letters that compose the word between the quotation marks, e.g. user, uSeR, USER, usER, … A username contains at least one letter and up to 8 letters. User names are case-sensitive as they are not defined as a string between quotation marks. The password rule indicates that a password starts with a letter and can contain any number of letters or digits. The white space and the control characters cannot appear in a password defined by the above rule.
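A parser for such a grammar can be sketched with regular expressions. Since the figure is not reproduced here, the exact rules are assumptions derived from the description above: a usercommand is the case-insensitive keyword `user`, a space, one to eight letters and CRLF; a passwordcommand uses the keyword `pass` (our guess for the keyword), a space, a letter followed by letters or digits, and CRLF.

```python
import re

# Assumed rules: usercommand = "user" SP 1*8ALPHA CRLF
USER_CMD = re.compile(r"(?i:user) ([A-Za-z]{1,8})\r\n")
# Assumed rules: passwordcommand = "pass" SP ALPHA *(ALPHA / DIGIT) CRLF
PASS_CMD = re.compile(r"(?i:pass) ([A-Za-z][A-Za-z0-9]*)\r\n")


def parse_command(line: str):
    """Return (command, argument) if the line is valid, otherwise None."""
    match = USER_CMD.fullmatch(line)
    if match:
        return ("user", match.group(1))
    match = PASS_CMD.fullmatch(line)
    if match:
        return ("pass", match.group(1))
    return None


print(parse_command("uSeR alice\r\n"))   # keyword is case-insensitive
print(parse_command("user Alice\r\n"))   # the username keeps its case
print(parse_command("pass 1abc\r\n"))    # invalid: must start with a letter
```

Only the keyword is matched case-insensitively; the argument is returned unchanged, which mirrors the case-sensitivity of user names described above.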

Besides character strings, some applications also need to exchange 16-bit and 32-bit fields such as integers. A naive solution would have been to send the 16- or 32-bit field as it is encoded in the host’s memory. Unfortunately, there are different methods to store 16- or 32-bit fields in memory. Some CPUs store the most significant byte of a 16-bit field in the first address of the field while others store the least significant byte at this location. When networked applications running on different CPUs exchange 16-bit fields, there are two possibilities to transfer them over the transport service :

send the most significant byte followed by the least significant byte

send the least significant byte followed by the most significant byte

The first possibility was named big-endian in a note written by Cohen [Cohen1980] while the second was named little-endian. Vendors of CPUs that used big-endian in memory insisted on using big-endian encoding in networked applications while vendors of CPUs that used little-endian recommended the opposite. Several studies were written on the relative merits of each type of encoding, but the discussion became almost a religious issue [Cohen1980]. Eventually, the Internet chose the big-endian encoding, i.e. multi-byte fields are always transmitted by sending the most significant byte first. RFC 791 refers to this encoding as the network byte order. Most libraries [9] used to write networked applications contain functions to convert multi-byte fields from memory to the network byte order and the reverse.
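As an illustration, the standard Python `struct` and `socket` modules expose both encodings. The values below are arbitrary examples.

```python
import socket
import struct
import sys

value = 0x1234

big = struct.pack(">H", value)      # most significant byte first
little = struct.pack("<H", value)   # least significant byte first
network = struct.pack("!H", value)  # "!" selects the network byte order

print("host byte order :", sys.byteorder)
print("big-endian      :", big.hex())
print("little-endian   :", little.hex())
print("network order   :", network.hex())

# htons/ntohs convert a 16-bit value between host and network byte order.
# On a big-endian host they leave the value unchanged.
assert socket.ntohs(socket.htons(value)) == value

# The same idea applies to 32-bit fields.
word = struct.pack("!I", 0x0A0B0C0D)
print("32-bit field    :", word.hex())
```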

Besides 16-bit and 32-bit words, some applications need to exchange data structures containing bit fields of various lengths. For example, a message may be composed of a 16-bit field followed by eight one-bit flags, a 24-bit field and two 8-bit bytes. Internet protocol specifications will define such a message by using a representation such as Fig. 22. In this representation, each line corresponds to 32 bits and the vertical lines are used to delineate fields. The numbers above the lines indicate the bit positions in the 32-bit word, with the high order bit at position 0.

Fig. 22 Message format#

The message mentioned above will be transmitted starting from the upper 32-bit word in network byte order. The first field is encoded in 16 bits. It is followed by eight one-bit flags (A-H) and a 24-bit field, whose high order byte is shown in the first line and whose two low order bytes appear in the second line. The message ends with two one-byte fields. This ASCII representation is frequently used when defining binary protocols. We will use it for all the binary protocols that are discussed in this book.
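The layout described above can be sketched in Python by building the two 32-bit words with shifts and masks. The function and parameter names are ours, and we assume that flag A occupies the high order bit of the flags byte.

```python
import struct


def pack_message(first: int, flags: int, middle: int, b1: int, b2: int) -> bytes:
    """Encode a 16-bit field, eight flags, a 24-bit field and two bytes."""
    if not (0 <= first < 1 << 16 and 0 <= flags < 1 << 8
            and 0 <= middle < 1 << 24 and 0 <= b1 < 256 and 0 <= b2 < 256):
        raise ValueError("field out of range")
    # First word: 16-bit field, flags A-H, high order byte of the 24-bit field.
    word1 = (first << 16) | (flags << 8) | (middle >> 16)
    # Second word: two low order bytes of the 24-bit field, then two bytes.
    word2 = ((middle & 0xFFFF) << 16) | (b1 << 8) | b2
    return struct.pack("!II", word1, word2)


def unpack_message(data: bytes):
    """Decode the eight bytes produced by pack_message."""
    word1, word2 = struct.unpack("!II", data)
    first = word1 >> 16
    flags = (word1 >> 8) & 0xFF
    middle = ((word1 & 0xFF) << 16) | (word2 >> 16)
    return first, flags, middle, (word2 >> 8) & 0xFF, word2 & 0xFF


message = pack_message(0x1234, 0b10000001, 0xABCDEF, 0x11, 0x22)
print(message.hex())
print(unpack_message(message))
```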

The peer-to-peer model emerged during the last ten years as another possible architecture for networked applications. In the traditional client-server model, hosts act either as servers or as clients and a server serves a large number of clients. In the peer-to-peer model, all hosts act as both servers and clients. The peer-to-peer model has been used to develop various networked applications, ranging from Internet telephony to file sharing or Internet-wide filesystems. A detailed description of peer-to-peer applications may be found in [BYL2008]. Surveys of peer-to-peer protocols and applications may be found in [AS2004] and [LCP2005].

Naming and addressing#

The network and the transport layers rely on addresses that are encoded as fixed-size bit strings. A network layer address uniquely identifies a host. Several transport layer entities can use the service of the same network layer. For example, a reliable transport protocol and a connectionless transport protocol can coexist on the same host. In this case, the network layer multiplexes the segments produced by these two protocols. This multiplexing is usually achieved by placing in the network packet header a field that indicates which transport protocol should process the segment. Given that there are few different transport protocols, this field does not need to be long. The port numbers play a similar role in the transport layer since they enable it to multiplex data from several application processes.

While addresses are natural for the network and transport layer entities, humans prefer to use names when interacting with network services. Names can be encoded as a character string, and a mapping service allows applications to map a name into the corresponding address. Using names is friendlier for humans, but it also provides a level of indirection which is very useful in many situations.

In the early days of the Internet, only a few hosts (mainly minicomputers) were connected to the network. The most popular applications were remote login and file transfer. By 1983, there were already five hundred hosts attached to the Internet [Zakon]. Each of these hosts was identified by a unique address. Forcing human users to remember the addresses of the hosts that they wanted to use was not user-friendly. Humans prefer to remember names and use them when needed. Using names as aliases for addresses is a common technique in Computer Science. It simplifies the development of applications and allows the developer to ignore the low-level details. For example, by using a programming language instead of writing machine code, a developer can write software without knowing whether the variables that it uses are stored in memory or inside registers.

Because names are at a higher level than addresses, they make it possible (both in the programming example above and on the Internet) to treat addresses as mere technical identifiers, which can change at will. Only the names are stable.

The first solution that allowed applications to use names was the hosts.txt file. This file is similar to the symbol table found in compiled code. It contains the mapping between the name of each Internet host and its associated address [1]. It was maintained by the SRI International Network Information Center (NIC). When a new host was connected to the network, the system administrator had to register its name and address at the NIC. The NIC updated the hosts.txt file on its server. All Internet hosts regularly retrieved the updated hosts.txt file from the SRI server. This file was stored at a well-known location on each Internet host (see RFC 952) and networked applications could use it to find the address corresponding to a name.

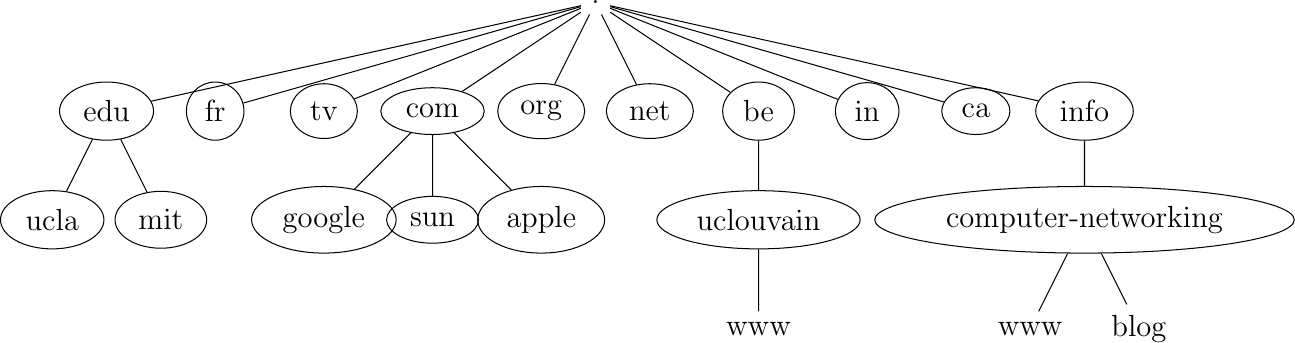

A hosts.txt file can be used when there are up to a few hundred hosts on the network. However, it is clearly not suitable for a network containing thousands or millions of hosts. A key issue in a large network is to define a suitable naming scheme. The ARPANet initially used a flat naming space, i.e. each host was assigned a unique name. To limit collisions between names, these names usually contained the name of the institution and a suffix to identify the host inside the institution (a kind of poor man’s hierarchical naming scheme). On the ARPANet, few institutions had several hosts connected to the network.

However, the limitations of a flat naming scheme became clear before the end of the ARPANet, and RFC 819 proposed a hierarchical naming scheme. While RFC 819 discussed the possibility of organizing the names as a directed graph, the Internet opted for a tree structure capable of containing all names. In this tree, the top-level domains are those that are directly attached to the root. The first top-level domain was .arpa [2]. This top-level name was initially added as a suffix to the names of the hosts attached to the ARPANet and listed in the hosts.txt file. In 1984, the .gov, .edu, .com, .mil, and .org generic top-level domain names were added. RFC 1032 proposed the utilization of the two-letter ISO-3166 country codes as top-level domain names. Since ISO-3166 defines a two-letter code for each country recognized by the United Nations, this allowed all countries to automatically have a top-level domain. These domains include .be for Belgium, .fr for France, .us for the USA, .ie for Ireland, .tv for Tuvalu (a group of small islands in the Pacific), and .tm for Turkmenistan. The set of top-level domain names is managed by the Internet Corporation for Assigned Names and Numbers (ICANN). ICANN also adds generic top-level domains that are not related to a country. For example, the .cat top-level domain has been registered for the Catalan language. There are ongoing discussions within ICANN to increase the number of top-level domains.

Each top-level domain is managed by an organization that decides how subdomain names can be registered. Most top-level domain names use a first-come, first-served system and allow anyone to register domain names, but there are some exceptions. For example, .gov is reserved for the US government, .int is reserved for international organizations, and names in .ca are mainly reserved for companies or users that are present in Canada.

Fig. 23 The tree of domain names

The syntax of the domain names has been defined more precisely in RFC 1035. This document recommends the following BNF for fully qualified domain names (the domain names themselves have a much richer syntax).

domain ::= subdomain | " "

subdomain ::= label | subdomain "." label

label ::= letter [ [ ldh-str ] let-dig ]

ldh-str ::= let-dig-hyp | let-dig-hyp ldh-str

let-dig-hyp ::= let-dig | "-"

let-dig ::= letter | digit

letter ::= any one of the 52 alphabetic characters A through Z in upper case and a through z in lower case

digit ::= any one of the ten digits 0 through 9

This grammar specifies that a host name is an ordered list of labels separated by the dot (.) character. Each label can contain letters, numbers, and the hyphen character (-) [3]. Fully qualified domain names are read from left to right. The first label is a hostname or a domain name followed by the hierarchy of domains and ending with the root implicitly at the right. The top-level domain name must be one of the registered TLDs [4]. For example, in the above figure, www.computer-networking.info corresponds to a host named www inside the computer-networking domain that belongs to the info top-level domain.
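The grammar above translates almost directly into a regular expression. The sketch below is ours and only checks the syntax; it does not verify that the top-level domain is registered. It also enforces the limit of 63 characters per label that RFC 1035 specifies separately from the grammar.

```python
import re

# label ::= letter [ [ ldh-str ] let-dig ]
LABEL = re.compile(r"[A-Za-z](?:[A-Za-z0-9-]*[A-Za-z0-9])?")


def is_valid_domain(name: str) -> bool:
    """Check a name against the RFC 1035 grammar shown above."""
    if name == " ":  # the grammar uses " " for the root domain
        return True
    return all(len(label) <= 63 and LABEL.fullmatch(label) is not None
               for label in name.split("."))


for candidate in ["www.computer-networking.info", "-bad.example",
                  "bad-.example", "a..b"]:
    print(candidate, is_valid_domain(candidate))
```

A label that starts or ends with a hyphen is rejected, as is an empty label between two dots.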

Note

Some visually similar characters have different character codes

The Domain Name System was created at a time when the Internet was mainly used in North America. The initial design assumed that all domain names would be composed of letters and digits (RFC 1035). As Internet usage grew in other parts of the world, it became important to support non-ASCII characters. For this, extensions to the Domain Name System have been proposed (RFC 3490). In a nutshell, the solution that is used to support Internationalized Domain Names works as follows. First, it is possible to use most of the Unicode characters to encode domain names and hostnames, with a few exceptions (for example, the dot character cannot be part of a name since it is used as a separator). Once a domain name has been encoded as a series of Unicode characters, it is then converted into a string that contains the xn-- prefix and a sequence of ASCII characters. More details on these algorithms can be found in RFC 3490 and RFC 3492.

The possibility of using all Unicode characters to create domain names opened a new form of attack called the homograph attack. This attack occurs when two character strings or domain names are visually similar but do not correspond to the same server. A simple example is https://G00GLE.COM and https://GOOGLE.COM. These two URLs are visually close but they correspond to different names (the first one does not point to a valid server [5]). With other Unicode characters, it is possible to construct domain names that are visually equivalent to existing ones. See [Zhe2017] for additional details on this attack.
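Python's built-in `idna` codec, which implements the conversion of RFC 3490, can be used to observe both the xn-- encoding and a homograph. The names below are invented for the illustration and use the reserved `.example` top-level domain.

```python
# Two names that look alike on screen. The second one starts with
# U+0430 (CYRILLIC SMALL LETTER A) instead of the Latin letter "a".
latin = "apple.example"
mixed = "\u0430pple.example"

print(latin == mixed)           # the strings differ
print(latin.encode("idna"))     # pure ASCII names are left unchanged
print(mixed.encode("idna"))     # non-ASCII labels get the xn-- prefix

# The conversion is reversible.
encoded = mixed.encode("idna")
print(encoded.decode("idna") == mixed)
```

Comparing the ASCII forms of two names is one simple way to notice that they are different even when they are rendered identically.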

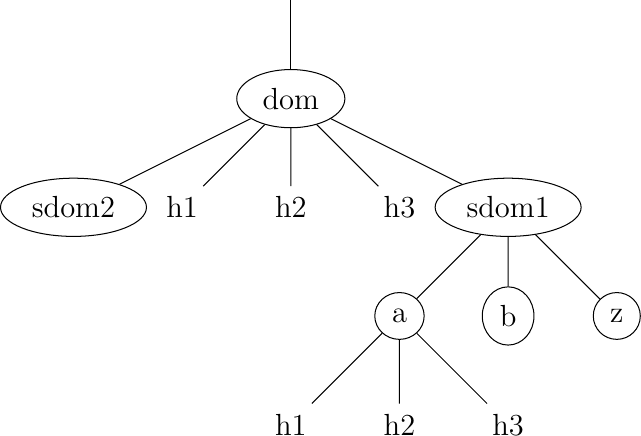

This hierarchical naming scheme is a key component of the Domain Name System (DNS). The DNS is a distributed database that contains mappings between fully qualified domain names and addresses. The DNS uses the client-server model. The clients are hosts or applications that need to retrieve the mapping for a given name. Each nameserver stores part of the distributed database and answers the queries sent by clients. There is at least one nameserver that is responsible for each domain. In the figure below, domains are represented by circles and there are three hosts inside domain dom (h1, h2, and h3) and three hosts inside domain a.sdom1.dom. As shown in the figure below, a sub-domain may contain both host names and sub-domains.

Fig. 24 A simple tree of domain names

A nameserver that is responsible for domain dom can directly answer the following queries :

the address of any host residing directly inside domain dom (e.g. h2.dom in the figure above)

the nameserver(s) that are responsible for any direct sub-domain of domain dom (i.e. sdom1.dom and sdom2.dom in the figure above, but not z.sdom1.dom)

To retrieve the mapping for host h2.dom, a client sends its query to the name server that is responsible for the domain .dom. The name server directly answers the query. To retrieve a mapping for h3.a.sdom1.dom, a DNS client first sends a query to the name server that is responsible for the .dom domain. This nameserver returns the nameserver that is responsible for the sdom1.dom domain. This nameserver can now be contacted to obtain the nameserver that is responsible for the a.sdom1.dom domain. This nameserver can be contacted to retrieve the mapping for the h3.a.sdom1.dom name. Thanks to this structure, it is possible for a DNS client to obtain the mapping of any host inside the .dom domain or any of its subdomains. To ensure that any DNS client will be able to resolve any fully qualified domain name, there are special nameservers that are responsible for the root of the domain name hierarchy. These nameservers are called root nameservers.
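The walk through the hierarchy described above can be simulated with a few dictionaries. This is a toy model, not a DNS implementation: the `ZONES` table, the function names and the addresses (taken from the 2001:db8::/32 documentation prefix) are all hypothetical.

```python
# Each entry models the nameserver responsible for one domain.
ZONES = {
    "dom": {
        "hosts": {"h1.dom": "2001:db8::1", "h2.dom": "2001:db8::2",
                  "h3.dom": "2001:db8::3"},
        "delegations": ["sdom1.dom", "sdom2.dom"],
    },
    "sdom1.dom": {"hosts": {}, "delegations": ["a.sdom1.dom"]},
    "a.sdom1.dom": {
        "hosts": {"h3.a.sdom1.dom": "2001:db8:a::3"},
        "delegations": [],
    },
}


def query(zone: str, name: str):
    """Answer a query as the nameserver responsible for `zone` would."""
    data = ZONES[zone]
    if name in data["hosts"]:
        return ("answer", data["hosts"][name])
    for sub in data["delegations"]:
        if name.endswith("." + sub):
            return ("referral", sub)
    return ("error", None)


def resolve(name: str, start: str = "dom"):
    """Follow referrals from `start` and return (address, zones contacted)."""
    zone, path = start, [start]
    while True:
        kind, value = query(zone, name)
        if kind == "answer":
            return value, path
        if kind == "referral" and value in ZONES:
            zone = value
            path.append(zone)
            continue
        return None, path


print(resolve("h2.dom"))
print(resolve("h3.a.sdom1.dom"))
```

The second call contacts three nameservers in turn, one per level of the hierarchy, exactly as in the example above.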

Each root nameserver maintains the list [6] of all the nameservers that are responsible for each of the top-level domain names and their addresses [7]. All root nameservers cooperate and provide the same answers. By querying any of the root nameservers, a DNS client can obtain the nameserver that is responsible for any top-level-domain name. From this nameserver, it is possible to resolve any domain name.

To be able to contact the root nameservers, each DNS client must know their addresses. This implies that DNS clients must maintain an up-to-date list of the addresses of the root nameservers. Without this list, it is impossible to contact the root nameservers. Forcing all Internet hosts to maintain the most recent version of this list would be difficult from an operational point of view. To solve this problem, the designers of the DNS introduced a special type of DNS server : the DNS resolvers. A resolver is a server that provides the name resolution service for a set of clients. A network usually contains a few resolvers. Each host in these networks is configured to send all its DNS queries via one of its local resolvers. These queries are called recursive queries as the resolver must recursively send requests through the hierarchy of nameservers to obtain the answer.

DNS resolvers have several advantages over letting each Internet host query nameservers directly. Firstly, regular Internet hosts do not need to maintain the up-to-date list of the addresses of the root servers. Secondly, regular Internet hosts do not need to send queries to nameservers all over the Internet. Furthermore, as a DNS resolver serves a large number of hosts, it can cache the received answers. This allows the resolver to quickly return answers for popular DNS queries and reduces the load on all DNS servers [JSBM2002].

Benefits of names#

In addition to being more human-friendly, using names instead of addresses inside applications has several important benefits. To understand them, let us consider a popular application that provides information stored on servers. This application involves clients and servers. The server provides information upon requests from client processes. A first deployment of this application would be to rely only on addresses. In this case, the server process would be installed on one host, and the clients would connect to this server to retrieve information. Such a deployment has several drawbacks :

if the server process moves to another physical server, all clients must be informed about the new server address.

if there are many concurrent clients, the load of the server will increase without any possibility of adding another server without changing the server addresses used by the clients.

Using names solves these problems and provides additional benefits. If the clients are configured with the name of the server, they will query the name service before contacting the server. The name service will resolve the name into the corresponding address. If a server process needs to move from one physical server to another, it suffices to update the name-to-address mapping on the name service to allow all clients to connect to the new server. The name service also enables the servers to better sustain the load. Assume a very popular server which is accessed by millions of users. This service cannot be provided by a single physical server due to performance limitations. Thanks to the utilization of names, it is possible to scale this service by mapping a given name to a set of addresses. When a client queries the name service for the server’s name, the name service returns one of the addresses in the set. Various strategies can be used to select one particular address inside the set of addresses. A first strategy is to select a random address in the set. A second strategy is to maintain information about the load on the servers and return the address of the least loaded server. Note that the list of server addresses does not need to remain fixed. It is possible to add and remove addresses from the list to cope with load fluctuations. Another strategy is to infer the location of the client from the name request and return the address of the closest server.
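The first two selection strategies can be sketched as follows. The `NameService` class, its method names and the addresses are hypothetical; a real name service would obtain the load information from some monitoring system.

```python
import random


class NameService:
    """Toy name service that maps one name onto a set of addresses."""

    def __init__(self):
        self._pool = {}  # name -> {address: last reported load}

    def add(self, name, address, load=0):
        self._pool.setdefault(name, {})[address] = load

    def remove(self, name, address):
        self._pool[name].pop(address, None)

    def report_load(self, name, address, load):
        self._pool[name][address] = load

    def resolve_random(self, name):
        """First strategy: pick any address of the set at random."""
        return random.choice(list(self._pool[name]))

    def resolve_least_loaded(self, name):
        """Second strategy: pick the address with the smallest load."""
        servers = self._pool[name]
        return min(servers, key=servers.get)


service = NameService()
service.add("www.example.com", "2001:db8::10", load=70)
service.add("www.example.com", "2001:db8::11", load=20)
print(service.resolve_random("www.example.com"))
print(service.resolve_least_loaded("www.example.com"))

# The set can change over time without any change on the clients.
service.add("www.example.com", "2001:db8::12", load=5)
print(service.resolve_least_loaded("www.example.com"))
```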

Mapping a single name onto a set of addresses allows popular servers to dynamically scale. There are also benefits in mapping multiple names, possibly a large number of them, onto a single address. Consider the case of information servers run by individuals or SMEs. Some of these servers attract only a few clients per day. Using a single physical server for each of these services would be a waste of resources. A better approach is to use a single server for a set of services that are all identified by different names. This enables service providers to support a large number of server processes, identified by different names, on a single physical server. If one of these server processes becomes very popular, it will be possible to map its name onto a set of addresses to be able to sustain the load. There are some deployments where this mapping is done dynamically as a function of the load.

Names provide a lot of flexibility compared to addresses. For the network, they play a similar role as variables in programming languages. No programmer using a high-level programming language would consider using hardcoded values instead of variables. For the same reasons, all networked applications should depend on names and avoid dealing with addresses as much as possible.

The Domain Name System#

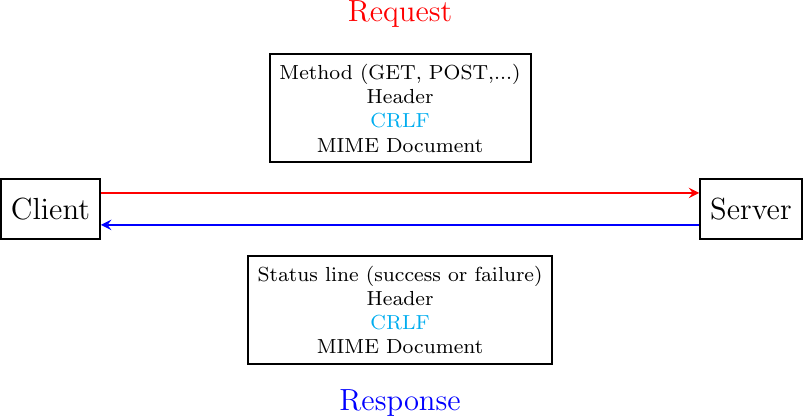

The last component of the Domain Name System is the DNS protocol. The original DNS protocol runs above both the datagram and the bytestream services. In practice, the datagram service is used when short queries and responses are exchanged, and the bytestream service is used when longer responses are expected. In this section, we first focus on the utilization of the DNS protocol above the datagram service. We will discuss later other recently proposed protocols to carry DNS information.

DNS messages are composed of five parts that are named sections in RFC 1035. The first three sections are mandatory, and the last two sections are optional. The first section of a DNS message is its Header. It contains information about the message type and the content of the other sections. The second section contains the Question sent to the nameserver or resolver. The third section contains the Answer to the Question. When a client sends a DNS query, the Answer section is empty. The fourth section, named Authority, contains information about the servers that can provide an authoritative answer if required. The last section contains additional information that is supplied by the resolver or nameserver but was not requested in the question.

The header of DNS messages is composed of 12 bytes. The figure below presents its structure.

Fig. 25 The DNS header#

The Transaction ID (transaction identifier) is a 16-bit random value chosen by the client. When a client sends a question to a DNS server, it remembers the question and its identifier. When a server returns an answer, it returns in the Transaction ID field the identifier chosen by the client. Thanks to this identifier, the client can match the received answer with the question that it sent.

The DNS header contains a series of flags. The QR flag is used to distinguish between queries and responses. It is set to 0 in DNS queries and 1 in DNS answers. The Opcode is used to specify the query type. For instance, a standard query is used when a client sends a name and the server returns the corresponding data. An update request is used when the client sends a name and new data, and the server then updates its database.

The AA bit is set when the server that sent the response has authority for the domain name found in the question section. In the original DNS deployments, two types of servers were considered : authoritative servers and non-authoritative servers. The authoritative servers are managed by the system administrators responsible for a given domain. They always store the most recent information about a domain. Non-authoritative servers are servers or resolvers that store DNS information about external domains without being managed by the owners of a domain. They may thus provide answers that are out of date. From a security point of view, the authoritative bit is not an absolute indication about the validity of an answer. Securing the Domain Name System is a complex problem that was only addressed satisfactorily recently by the utilization of cryptographic signatures in the DNSSEC extensions to DNS described in RFC 4033.

The RD (recursion desired) bit is set by a client when it sends a query to a resolver. Such a query is said to be recursive because the resolver will recursively traverse the DNS hierarchy to retrieve the answer on behalf of the client. In the past, all resolvers were configured to perform recursive queries on behalf of any Internet host. However, this exposes the resolvers to several security risks. The simplest one is that the resolver could become overloaded by having too many recursive queries to process. Most resolvers [8] only allow recursive queries from clients belonging to their company or network and discard all other recursive queries. The RA bit indicates whether the server supports recursion. The RCODE is used to distinguish between different types of errors. See RFC 1035 for additional details. The last four fields indicate the number of entries in the Question, Answer, Authority, and Additional sections of the DNS message.
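The 12-byte header can be built and decoded with the `struct` module. The sketch below follows the bit positions of RFC 1035; the function names are ours. It also decodes the TC (truncation) bit, which RFC 1035 defines but which is not discussed above.

```python
import struct


def build_query_header(transaction_id: int, recursion_desired: bool = True,
                       qdcount: int = 1) -> bytes:
    """Build the header of a standard query (QR=0, Opcode=0)."""
    flags = 0
    if recursion_desired:
        flags |= 1 << 8  # RD bit
    return struct.pack("!HHHHHH", transaction_id, flags, qdcount, 0, 0, 0)


def parse_header(data: bytes) -> dict:
    """Decode the first 12 bytes of a DNS message."""
    tid, flags, qd, an, ns, ar = struct.unpack("!HHHHHH", data[:12])
    return {
        "id": tid,
        "qr": flags >> 15,
        "opcode": (flags >> 11) & 0xF,
        "aa": (flags >> 10) & 1,
        "tc": (flags >> 9) & 1,
        "rd": (flags >> 8) & 1,
        "ra": (flags >> 7) & 1,
        "rcode": flags & 0xF,
        "qdcount": qd, "ancount": an, "nscount": ns, "arcount": ar,
    }


header = build_query_header(0xBEEF)
print(header.hex())
print(parse_header(header))

# A response header carrying the same identifier, with QR, RD and RA set.
response = bytes.fromhex("beef81800001000100000000")
print(parse_header(response))
```

A real client would pick the identifier at random, for instance with `secrets.randbelow(1 << 16)`, and compare it with the identifier of each received response.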

The last three sections of the DNS message (Answer, Authority, and Additional) contain Resource Records (RR). All RRs have the same top-level format shown in the figure below.

Fig. 26 DNS Resource Records#

In a Resource Record (RR), the Name indicates the name of the node to which this resource record pertains. The two-byte Type field indicates the type of resource record. The Class field was used to support the utilization of the DNS in other environments than the Internet. The IN Class refers to Internet names.

The TTL field indicates the lifetime of the Resource Record in seconds. This field is set by the server that returns an answer and indicates for how long a client or a resolver can store the Resource Record inside its cache. A long TTL indicates a stable RR. Some companies use short TTL values for mobile hosts and also for popular servers. For example, a web hosting company that wants to spread the load over a pool of a hundred servers can configure its nameservers to return different answers to different clients. If each answer has a small TTL, the clients will be forced to send DNS queries regularly. The nameserver will reply to these queries by supplying the address of the least loaded server.
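The role of the TTL in a cache can be sketched as follows. The `DnsCache` class is a hypothetical illustration; the clock is passed as a parameter so that the expiration can be demonstrated without waiting.

```python
import time


class DnsCache:
    """Toy cache that forgets a Resource Record once its TTL has elapsed."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._entries = {}  # (name, type) -> (expiry time, rdata)

    def store(self, name, rtype, rdata, ttl):
        self._entries[(name.lower(), rtype)] = (self._clock() + ttl, rdata)

    def lookup(self, name, rtype):
        key = (name.lower(), rtype)
        entry = self._entries.get(key)
        if entry is None:
            return None
        expiry, rdata = entry
        if self._clock() >= expiry:
            del self._entries[key]  # expired: a new DNS query is needed
            return None
        return rdata


# A fake clock makes the example deterministic.
now = [0.0]
cache = DnsCache(clock=lambda: now[0])
cache.store("www.example.com", "AAAA", "2001:db8::10", ttl=30)

print(cache.lookup("www.example.com", "AAAA"))  # still valid
now[0] = 31.0
print(cache.lookup("www.example.com", "AAAA"))  # expired, returns None
```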

The RDLength field is the length of the RData field that contains the information of the type specified in the Type field.
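A minimal sketch of how the fixed part of a Resource Record could be decoded is shown below. It assumes that the Name field has already been decoded and that `offset` points just after it; the helper name `parse_rr_fixed_fields` is hypothetical, and the numeric codes (1 for A, 28 for AAAA, 1 for the IN class) are those assigned in RFC 1035 and RFC 3596.

```python
import ipaddress
import struct

TYPE_A, TYPE_AAAA, CLASS_IN = 1, 28, 1

def parse_rr_fixed_fields(data: bytes, offset: int):
    """Return (type, class, ttl, rdata) for the RR whose Name ends at offset."""
    # Type (2 bytes), Class (2 bytes), TTL (4 bytes), RDLength (2 bytes).
    rtype, rclass, ttl, rdlength = struct.unpack_from("!HHIH", data, offset)
    start = offset + 10
    rdata = data[start:start + rdlength]
    if len(rdata) != rdlength:
        raise ValueError("truncated resource record")
    return rtype, rclass, ttl, rdata

# Hand-built example: the part of an A record that follows its Name,
# carrying the address 192.0.2.11 with a TTL of one hour.
example = struct.pack("!HHIH", TYPE_A, CLASS_IN, 3600, 4) + bytes([192, 0, 2, 11])
rtype, rclass, ttl, rdata = parse_rr_fixed_fields(example, 0)
print(rtype, rclass, ttl, ipaddress.ip_address(rdata))
```

Because RDLength gives the size of RData, a parser that does not know a given Type can still skip over the record and continue with the next one.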

Several types of DNS RR are used in practice. The A type encodes the IPv4 address that corresponds to the specified name. The AAAA type encodes the IPv6 address that corresponds to the specified name. An NS record contains the name of the DNS server that is responsible for a given domain. For example, a query for the AAAA record associated with the www.ietf.org name returned the following answer:

Fig. 27 Query for the AAAA record of www.ietf.org#

This answer contains several pieces of information. First, the name www.ietf.org is associated with the IP address 2001:1890:123a::1:1e. Second, the ietf.org domain is managed by six different nameservers. Five of these nameservers are reachable via IPv4 and IPv6.

CNAME (canonical name) records are used to define aliases. For example, www.example.com could be a CNAME for pc12.example.com, which is the actual name of the server on which the web server for www.example.com runs.

Note

Reverse DNS

The DNS is mainly used to find the address that corresponds to a given name. However, it is sometimes useful to obtain the name that corresponds to an IP address. This is done by using the PTR (pointer) RR. The RData part of a PTR RR contains the name while the Name part of the RR contains the IP address encoded in the in-addr.arpa domain. IPv4 addresses are encoded in in-addr.arpa by reversing the four decimal numbers that compose the dotted decimal representation of the address. For example, consider IPv4 address 192.0.2.11. The hostname associated with this address can be found by requesting the PTR RR that corresponds to 11.2.0.192.in-addr.arpa. A similar solution is used to support IPv6 addresses (RFC 3596), but it is slightly more complex given the length of IPv6 addresses. For example, consider IPv6 address 2001:1890:123a::1:1e. To obtain the name that corresponds to this address, we first need to expand it to its 32 hexadecimal digits and write them in reverse order, separated by dots: e.1.0.0.1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.a.3.2.1.0.9.8.1.1.0.0.2. In this notation, each character between dots corresponds to one nibble, i.e. four bits. The low-order nibble (e) appears first and the high-order nibble (2) last. One then appends the ip6.arpa domain name and queries for e.1.0.0.1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.a.3.2.1.0.9.8.1.1.0.0.2.ip6.arpa. In practice, tools and libraries do the conversion automatically and the user does not need to worry about it.
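As an example of such a library, Python's standard `ipaddress` module exposes the name to query through the `reverse_pointer` attribute. The short sketch below applies it to the two addresses used in this note; the wrapper function `reverse_name` is only introduced for the illustration.

```python
import ipaddress

def reverse_name(text: str) -> str:
    """Return the domain name to query with a PTR request for this address."""
    return ipaddress.ip_address(text).reverse_pointer

for text in ("192.0.2.11", "2001:1890:123a::1:1e"):
    print(text, "->", reverse_name(text))
```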

An important point to note regarding the Domain Name System is that it is extensible. Thanks to the Type and RDLength fields, the format of the Resource Records can easily be extended. Furthermore, a DNS implementation that receives a new Resource Record that it does not understand can ignore the record while still being able to process the other parts of the message. This allows, for example, a DNS server that only supports IPv6 to safely ignore the IPv4 addresses listed in the DNS reply for www.ietf.org while still being able to correctly parse the Resource Records that it understands. This allowed the Domain Name System to evolve over the years while still preserving the backward compatibility with already deployed DNS implementations.

Electronic mail#

Electronic mail, or email, is a very popular application in computer networks such as the Internet. Email appeared in the early 1970s and allows users to exchange text-based messages. Initially, it was mainly used to exchange short messages, but over the years its usage has grown. It is now used to exchange not only short messages, but also long messages that can be composed of several parts, as we will see later.

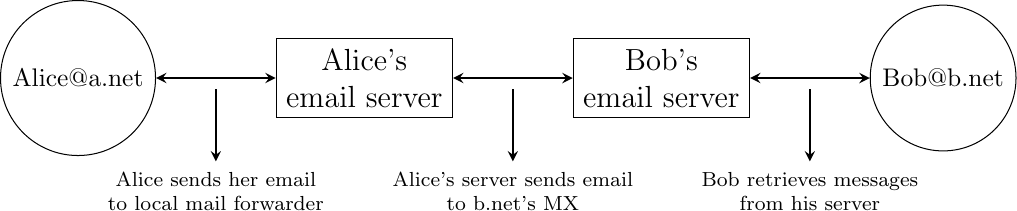

Before looking at the details of Internet email, let us consider a simple scenario illustrated in Fig. 28, where Alice sends an email to Bob. Alice prepares her email by using an email client and sends it to her email server. Alice’s email server extracts Bob’s address from the email and delivers the message to Bob’s server. Bob retrieves Alice’s message on his server and reads it by using his favorite email client or through his webmail interface.

Fig. 28 Simplified architecture of the Internet email

The email system that we consider in this book is composed of four components :

a message format, that defines how valid email messages are encoded

protocols, that allow hosts and servers to exchange email messages

client software, that allows users to easily create and read email messages

software, that allows servers to efficiently exchange email messages

We first discuss the format of email messages followed by the protocols that are used on today’s Internet to exchange and retrieve emails. Other email systems have been developed in the past [Bush1993] [Genilloud1990] [GC2000], but today most email solutions have migrated to Internet email. Information about the software that is used to compose and deliver emails may be found on Wikipedia, among others, for both email clients and email servers. More detailed information about the full Internet Mail Architecture may be found in RFC 5598.

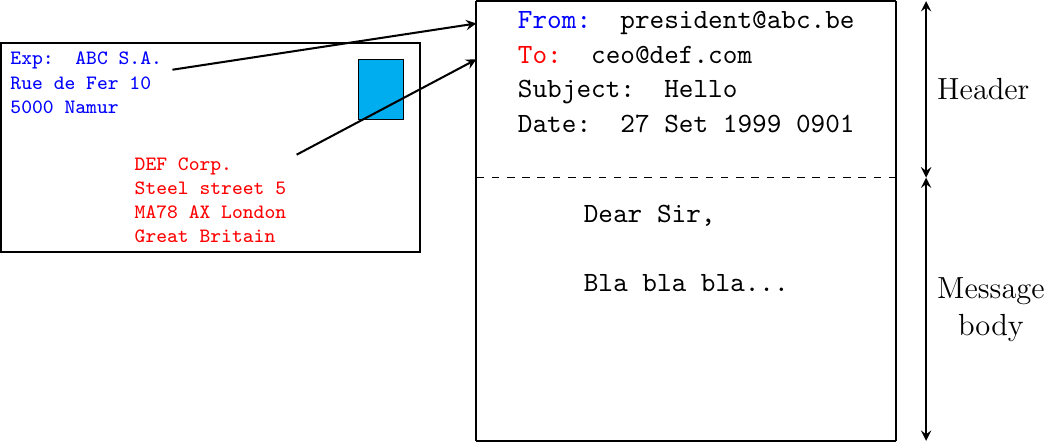

Email messages, like postal mail, are composed of two parts :

a header that plays the same role as the letterhead in regular mail. It contains metadata about the message.

the body that contains the message itself.

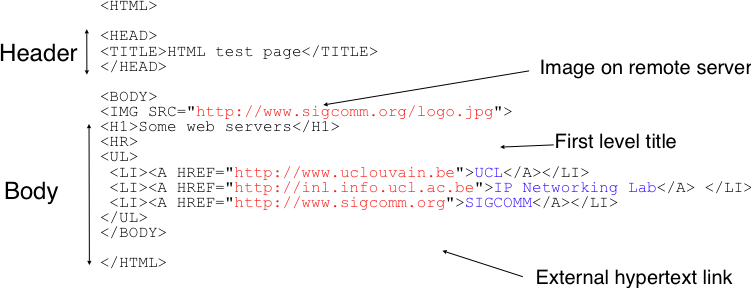

Email messages are entirely composed of lines of ASCII characters. Each line can contain up to 998 characters and is terminated by the CR and LF control characters RFC 5322. The lines that compose the header appear before the message body. An empty line, containing only the CR and LF characters, marks the end of the header. This is illustrated in Fig. 29.

Fig. 29 The structure of email messages

The email header contains several lines that all begin with a keyword followed by a colon and additional information. The format of email messages and the different types of header lines are defined in RFC 5322. Two of these header lines are mandatory and must appear in all email messages :

The sender address. This header line starts with From:. This contains the (optional) name of the sender followed by its email address between < and >. Email addresses are always composed of a username followed by the @ sign and a domain name.

The date. This header line starts with Date:. RFC 5322 precisely defines the format used to encode a date.

Other header lines appear in most email messages. The Subject: header line allows the sender to indicate the topic discussed in the email. Three types of header lines can be used to specify the recipients of a message :

the To: header line contains the email addresses of the primary recipients of the message [10]. Several addresses can be separated by using commas.

the cc: header line is used by the sender to provide a list of email addresses that must receive a carbon copy of the message. Several addresses can be listed in this header line, separated by commas. All recipients of the email message receive the To: and cc: header lines.

the bcc: header line is used by the sender to provide a list of comma separated email addresses that must receive a blind carbon copy of the message. The bcc: header line is not delivered to the recipients of the email message.

A simple email message containing the From:, To:, Subject: and Date: header lines and two lines of body is shown below.

From: Bob Smith <Bob@machine.example>

To: Alice Doe <alice@example.net>, Alice Smith <Alice@machine.example>

Subject: Hello

Date: Mon, 8 Mar 2010 19:55:06 -0600

This is the "Hello world" of email messages.

This is the second line of the body

Note the empty line after the Date: header line; this empty line contains only the CR and LF characters, and marks the boundary between the header and the body of the message.
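To make this structure concrete, the sketch below feeds the same message to the parser of Python's standard `email` package. It is only an illustration: the lines are joined with explicit CR LF characters, and the variable names are chosen for this example.

```python
from email import message_from_string
from email.utils import getaddresses

RAW = (
    "From: Bob Smith <Bob@machine.example>\r\n"
    "To: Alice Doe <alice@example.net>, Alice Smith <Alice@machine.example>\r\n"
    "Subject: Hello\r\n"
    "Date: Mon, 8 Mar 2010 19:55:06 -0600\r\n"
    "\r\n"  # the empty line that ends the header
    'This is the "Hello world" of email messages.\r\n'
    "This is the second line of the body\r\n"
)

message = message_from_string(RAW)

# The two header lines that must appear in every message.
missing = [name for name in ("From", "Date") if name not in message]

# Split the comma-separated To: line into (display name, address) pairs.
recipients = getaddresses(message.get_all("To", []))

print("Subject:", message["Subject"])
print("Recipients:", recipients)
print("Missing mandatory headers:", missing)
print(message.get_payload())
```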

Several other optional header lines are defined in RFC 5322 and elsewhere [11]. Furthermore, many email clients and servers define their own header lines starting from X-. Several of the optional header lines defined in RFC 5322 are worth being discussed here :

the Message-Id: header line is used to associate a “unique” identifier to each email. Email identifiers are usually structured like string@domain where string is a unique character string or sequence number chosen by the sender of the email and domain the domain name of the sender. Since domain names are unique, a host can generate globally unique message identifiers by concatenating a locally unique identifier with its domain name.

the In-reply-to: header line is used when a message was created in reply to a previous message. In this case, the end of the In-reply-to: line contains the identifier of the original message.

the Received: header line is used when an email message is processed by several servers before reaching its destination. Each intermediate email server adds a Received: header line. These header lines are useful to debug problems in delivering email messages.
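The string@domain structure of message identifiers can be illustrated with the `make_msgid` helper of Python's standard library, which builds the local part from local information such as the current time and a random number. The domain `machine.example` is simply reused from the earlier example message.

```python
from email.utils import make_msgid

# The part before the @ is generated locally; the domain makes it global.
message_id = make_msgid(domain="machine.example")
print("Message-Id:", message_id)
```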

The figure below shows the header lines of one email message. The message originated at a host named wira.firstpr.com.au and was received by smtp3.sgsi.ucl.ac.be. The Received: lines have been wrapped for readability.

Received: from smtp3.sgsi.ucl.ac.be (Unknown [10.1.5.3])

by mmp.sipr-dc.ucl.ac.be

(Sun Java(tm) System Messaging Server 7u3-15.01 64bit (built Feb 12 2010))

with ESMTP id <0KYY00L85LI5JLE0@mmp.sipr-dc.ucl.ac.be>; Mon,

08 Mar 2010 11:37:17 +0100 (CET)

Received: from mail.ietf.org (mail.ietf.org [64.170.98.32])

by smtp3.sgsi.ucl.ac.be (Postfix) with ESMTP id B92351C60D7; Mon,

08 Mar 2010 11:36:51 +0100 (CET)

Received: from [127.0.0.1] (localhost [127.0.0.1]) by core3.amsl.com (Postfix)

with ESMTP id F066A3A68B9; Mon, 08 Mar 2010 02:36:38 -0800 (PST)

Received: from localhost (localhost [127.0.0.1]) by core3.amsl.com (Postfix)

with ESMTP id A1E6C3A681B for <rrg@core3.amsl.com>; Mon,

08 Mar 2010 02:36:37 -0800 (PST)

Received: from mail.ietf.org ([64.170.98.32])

by localhost (core3.amsl.com [127.0.0.1]) (amavisd-new, port 10024)

with ESMTP id erw8ih2v8VQa for <rrg@core3.amsl.com>; Mon,

08 Mar 2010 02:36:36 -0800 (PST)

Received: from gair.firstpr.com.au (gair.firstpr.com.au [150.101.162.123])

by core3.amsl.com (Postfix) with ESMTP id 03E893A67ED for <rrg@irtf.org>; Mon,

08 Mar 2010 02:36:35 -0800 (PST)

Received: from [10.0.0.6] (wira.firstpr.com.au [10.0.0.6])

by gair.firstpr.com.au (Postfix) with ESMTP id D0A49175B63; Mon,

08 Mar 2010 21:36:37 +1100 (EST)

Date: Mon, 08 Mar 2010 21:36:38 +1100

From: Robin Whittle <rw@firstpr.com.au>

Subject: Re: [rrg] Recommendation and what happens next

In-reply-to: <C7B9C21A.4FAB%tony.li@tony.li>

To: RRG <rrg@irtf.org>

Message-id: <4B94D336.7030504@firstpr.com.au>

Message content removed

Initially, email was used to exchange small messages of ASCII text between computer scientists. However, with the growth of the Internet, supporting only ASCII text became a severe limitation for two reasons. First of all, non-English speakers wanted to write emails in their native language that often required more characters than those of the ASCII character table. Second, many users wanted to send other content than just ASCII text by email such as binary files, images or sound.

To solve this problem, the IETF developed the Multipurpose Internet Mail Extensions (MIME). These extensions were carefully designed to allow Internet email to carry non-ASCII characters and binary files without breaking the email servers that were deployed at that time. This requirement for backward compatibility forced the MIME designers to develop extensions to the existing email message format RFC 822 instead of defining a completely new format that would have been better suited to support the new types of emails.

RFC 2045 defines three new types of header lines to support MIME :

The MIME-Version: header line indicates the version of the MIME specification that was used to encode the email message. The current version of MIME is 1.0. Other versions of MIME may be defined in the future. Thanks to this header line, the software that processes email messages will be able to adapt to the MIME version used to encode the message. Messages that do not contain this header are supposed to be formatted according to the original RFC 822 specification.

The Content-Type: header line indicates the type of data that is carried inside the message (see below).

The Content-Transfer-Encoding: header line is used to specify how the message has been encoded. When MIME was designed, some email servers were only able to process messages containing characters encoded using the 7-bit ASCII character set. MIME allows the utilization of other character encodings.

Inside the email header, the Content-Type: header line indicates how the MIME email message is structured. RFC 2046 defines the utilization of this header line. The two most common structures for MIME messages are :

Content-Type: multipart/mixed. This header line indicates that the MIME message contains several independent parts. For example, such a message may contain a part in plain text and a binary file.

Content-Type: multipart/alternative. This header line indicates that the MIME message contains several representations of the same information. For example, a multipart/alternative message may contain both a plain text and an HTML version of the same text.

To support these two types of MIME messages, the recipient of a message must be able to extract the different parts from the message. In RFC 822, an empty line was used to separate the header lines from the body. Using an empty line to separate the different parts of an email body would be difficult as the body of email messages often contains one or more empty lines. Another possible option would be to define a special line, e.g. *-LAST_LINE-* to mark the boundary between two parts of a MIME message. Unfortunately, this is not possible as some emails may contain this string in their body (e.g. emails sent to students to explain the format of MIME messages). To solve this problem, the Content-Type: header line contains a second parameter that specifies the string that has been used by the sender of the MIME message to delineate the different parts. In practice, this string is often chosen randomly by the mail client.

The email message below, copied from RFC 2046 shows a MIME message containing two parts that are both in plain text and encoded using the ASCII character set. The string simple boundary is defined in the Content-Type: header as the marker for the boundary between two successive parts. Another example of MIME messages may be found in RFC 2046.

Date: Mon, 20 Sep 1999 16:33:16 +0200

From: Nathaniel Borenstein <nsb@bellcore.com>

To: Ned Freed <ned@innosoft.com>

Subject: Test

MIME-Version: 1.0

Content-Type: multipart/mixed; boundary="simple boundary"

preamble, to be ignored

--simple boundary

Content-Type: text/plain; charset=us-ascii

First part

--simple boundary

Content-Type: text/plain; charset=us-ascii

Second part

--simple boundary--
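As a sketch of how a recipient can extract the parts, the code below parses this message with Python's standard `email` package. Two details are assumptions of the example rather than of the text: the lines are joined with CR LF, and the last delimiter is written with two trailing hyphens, which is how RFC 2046 marks the end of the last part.

```python
from email import message_from_string

RAW = "\r\n".join([
    "Date: Mon, 20 Sep 1999 16:33:16 +0200",
    "From: Nathaniel Borenstein <nsb@bellcore.com>",
    "To: Ned Freed <ned@innosoft.com>",
    "Subject: Test",
    "MIME-Version: 1.0",
    'Content-Type: multipart/mixed; boundary="simple boundary"',
    "",
    "preamble, to be ignored",
    "--simple boundary",
    "Content-Type: text/plain; charset=us-ascii",
    "",
    "First part",
    "--simple boundary",
    "Content-Type: text/plain; charset=us-ascii",
    "",
    "Second part",
    "--simple boundary--",  # closing delimiter of the last part
    "",
])

message = message_from_string(RAW)
print(message.get_content_type(), "with boundary", repr(message.get_boundary()))

# For a multipart message, get_payload() returns the list of parts.
parts = message.get_payload()
texts = [part.get_payload().strip() for part in parts]
for part, text in zip(parts, texts):
    print(part.get_content_type(), "->", text)
```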

The Content-Type: header can also be used inside a MIME part. In this case, it indicates the type of data placed in this part. Each data type is specified as a type followed by a subtype. A detailed description may be found in RFC 2046. Some of the most popular Content-Type: header lines are :

text. The message part contains information in textual format. There are several subtypes : text/plain for regular ASCII text, text/html defined in RFC 2854 for documents in HTML format or the text/enriched format defined in RFC 1896. The Content-Type: header line may contain a second parameter that specifies the character set used to encode the text. charset=us-ascii is the standard ASCII character table. Other frequent character sets include charset=utf-8 or charset=iso-8859-1. The list of standard character sets is maintained by IANA.

image. The message part contains a binary representation of an image. The subtype indicates the format of the image such as gif, jpeg or png.

audio. The message part contains an audio clip. The subtype indicates the format of the audio clip like wav or mp3.

video. The message part contains a video clip. The subtype indicates the format of the video clip like avi or mp4.

application. The message part contains binary information that was produced by the particular application listed as the subtype. Email clients use the subtype to launch the application that is able to decode the received binary information.

Note

From ASCII to Unicode

The first computers used different techniques to represent characters in memory and on disk. During the 1960s, computers began to exchange information via tape or telephone lines. Unfortunately, each vendor had its own proprietary character set and exchanging data between computers from different vendors was often difficult. The 7-bit ASCII character table RFC 20 was adopted by several vendors and by many Internet protocols. However, ASCII became a problem with the internationalization of the Internet and the desire of more and more users to use character sets that support their own written language. A first attempt at solving this problem was the definition of the ISO-8859 character sets by ISO. This family of standards specified various character sets that allowed the representation of many European written languages by using 8-bit characters. Unfortunately, an 8-bit character set is not sufficient to support some widely used languages, such as those used in Asian countries. Fortunately, at the end of the 1980s, several computer scientists proposed to develop a standard that supports all written languages used on Earth today. The Unicode standard [Unicode] has now been adopted by most computer and software vendors. For example, Java always uses Unicode to manipulate characters, while Python can handle both ASCII and Unicode characters. Internet applications are slowly moving towards complete support for the Unicode character sets, but moving from ASCII to Unicode is an important change that can have a huge impact on currently deployed implementations. See, for example, the work to completely internationalize email RFC 4952 and domain names RFC 5890.

The last MIME header line is Content-Transfer-Encoding:. This header line is used after the Content-Type: header line, within a message part, and specifies how the message part has been encoded. The default encoding is 7-bit ASCII. The most frequent encodings are quoted-printable and Base64. Both support encoding a sequence of bytes into a set of ASCII lines that can be safely transmitted by email servers. quoted-printable is defined in RFC 2045. We briefly describe Base64, which is defined in RFC 2045 and RFC 4648.

Base64 divides the sequence of bytes to be encoded into groups of three bytes (with the last group possibly being partially filled). Each group of three bytes is then divided into four six-bit fields and each six bit field is encoded as a character from the table below.

| Value | Encoding | Value | Encoding | Value | Encoding | Value | Encoding |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 0 | A | 17 | R | 34 | i | 51 | z |
| 1 | B | 18 | S | 35 | j | 52 | 0 |
| 2 | C | 19 | T | 36 | k | 53 | 1 |
| 3 | D | 20 | U | 37 | l | 54 | 2 |
| 4 | E | 21 | V | 38 | m | 55 | 3 |
| 5 | F | 22 | W | 39 | n | 56 | 4 |
| 6 | G | 23 | X | 40 | o | 57 | 5 |
| 7 | H | 24 | Y | 41 | p | 58 | 6 |
| 8 | I | 25 | Z | 42 | q | 59 | 7 |
| 9 | J | 26 | a | 43 | r | 60 | 8 |
| 10 | K | 27 | b | 44 | s | 61 | 9 |
| 11 | L | 28 | c | 45 | t | 62 | + |
| 12 | M | 29 | d | 46 | u | 63 | / |
| 13 | N | 30 | e | 47 | v | | |
| 14 | O | 31 | f | 48 | w | | |
| 15 | P | 32 | g | 49 | x | | |
| 16 | Q | 33 | h | 50 | y | | |

The example below, from RFC 4648, illustrates the Base64 encoding.

| Input data | 0x14fb9c03d97e |
| --- | --- |
| 8-bit | 00010100 11111011 10011100 00000011 11011001 01111110 |
| 6-bit | 000101 001111 101110 011100 000000 111101 100101 111110 |
| Decimal | 5 15 46 28 0 61 37 62 |
| Encoding | F P u c A 9 l + |

The last point to be discussed about base64 is what happens when the length of the sequence of bytes to be encoded is not a multiple of three. In this case, the last group of bytes may contain one or two bytes instead of three. Base64 reserves the = character as a padding character. This character is used once when the last group contains two bytes and twice when it contains one byte as illustrated by the two examples below.

| Input data | 0x14 |
| --- | --- |
| 8-bit | 00010100 |
| 6-bit | 000101 000000 |
| Decimal | 5 0 |
| Encoding | F A = = |

| Input data | 0x14fb |
| --- | --- |
| 8-bit | 00010100 11111011 |
| 6-bit | 000101 001111 101100 |
| Decimal | 5 15 44 |
| Encoding | F P s = |
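The encoding procedure can be sketched directly in Python. The function `base64_encode` below is a teaching version written for this section, not the implementation used by any library; it follows the steps described above and is compared with the standard `base64` module on the three inputs of the examples (the last one being the bytes 0x14 0xfb, i.e. the bit pattern 00010100 11111011).

```python
import base64

ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/"

def base64_encode(data: bytes) -> str:
    """Encode data three bytes at a time, as described in the text."""
    encoded = []
    for start in range(0, len(data), 3):
        group = data[start:start + 3]
        # Left-align the group in 24 bits, filling missing bytes with zeros.
        bits = int.from_bytes(group, "big") << (8 * (3 - len(group)))
        # Cut the 24 bits into four 6-bit fields, most significant first.
        chars = [ALPHABET[(bits >> shift) & 0x3F] for shift in (18, 12, 6, 0)]
        # One input byte fills two characters, two bytes fill three.
        significant = len(group) + 1
        encoded.append("".join(chars[:significant]) + "=" * (4 - significant))
    return "".join(encoded)

for hexdata in ("14fb9c03d97e", "14", "14fb"):
    raw = bytes.fromhex(hexdata)
    print(hexdata, "->", base64_encode(raw), base64.b64encode(raw).decode("ascii"))
```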

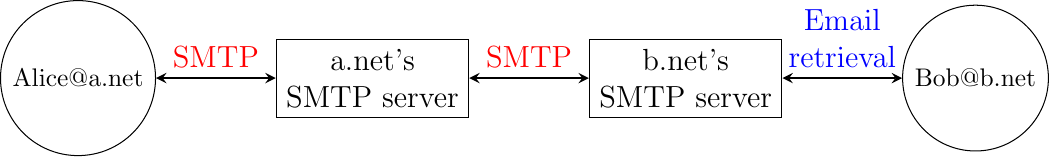

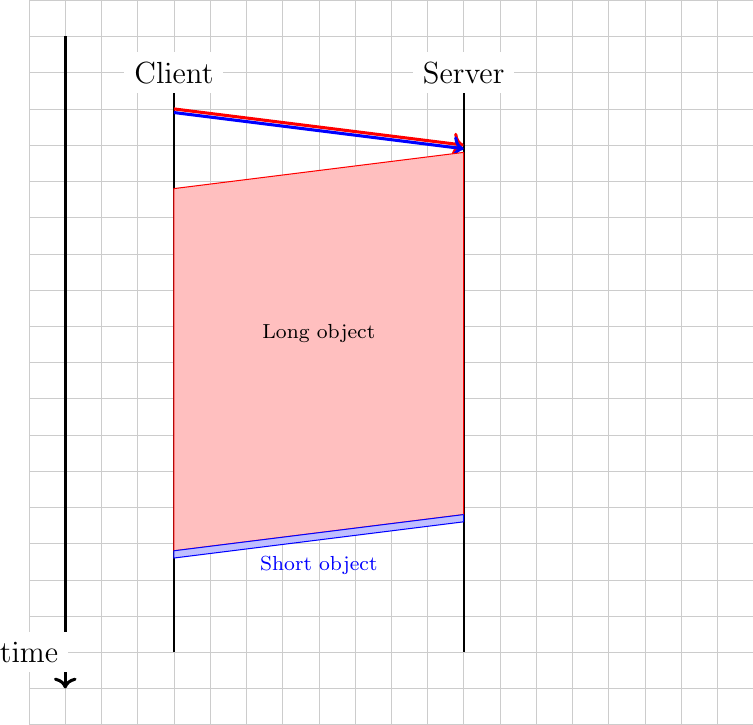

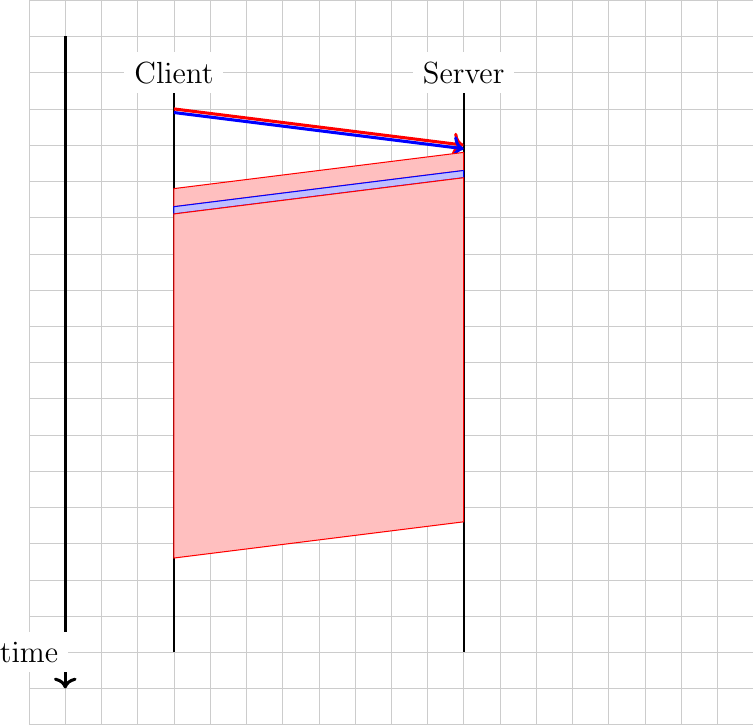

Now that we have explained the format of the email messages, we can discuss how these messages can be exchanged through the Internet. Fig. 30 illustrates the protocols that are used when Alice sends an email message to Bob. Alice prepares her email with an email client or on a webmail interface. To send her email to Bob, Alice’s client will use the Simple Mail Transfer Protocol (SMTP) to deliver her message to her SMTP server. Alice’s email client is configured with the name of the default SMTP server for her domain. There is usually at least one SMTP server per domain. To deliver the message, Alice’s SMTP server must find the SMTP server that contains Bob’s mailbox. This can be done by using the Mail eXchange (MX) records of the DNS. A set of MX records can be associated with each domain. Each MX record contains a numerical preference and the fully qualified domain name of an SMTP server that is able to deliver email messages destined to all valid email addresses of this domain. The DNS can return several MX records for a given domain. In this case, the server with the lowest numerical preference is used first RFC 2821. If this server is not reachable, the second most preferred server is used, and so on. Bob’s SMTP server will store the message sent by Alice until Bob retrieves it using a webmail interface or protocols such as the Post Office Protocol (POP) or the Internet Message Access Protocol (IMAP).
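The selection among several MX records can be sketched as follows. The records, the host names and the `try_server` callback are all hypothetical; a real SMTP server would obtain the records from a DNS query and would attempt an actual SMTP connection.

```python
def order_mx_records(records):
    """Return the MX hosts from most to least preferred (lowest number first)."""
    return [host for _, host in sorted(records, key=lambda record: record[0])]

def choose_server(records, try_server):
    """Return the first host, in preference order, that accepts the delivery."""
    for host in order_mx_records(records):
        if try_server(host):
            return host
    return None  # no server reachable: the message stays queued for a retry

# Hypothetical MX records for example.com as (preference, host) pairs.
mx_records = [
    (20, "backup.example.com"),
    (10, "smtp.example.com"),
    (30, "offsite.example.net"),
]

# Pretend that the most preferred server is currently unreachable.
reachable = {"backup.example.com", "offsite.example.net"}
print(order_mx_records(mx_records))
print(choose_server(mx_records, lambda host: host in reachable))
```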

Fig. 30 Email delivery protocols

The Simple Mail Transfer Protocol#

The Simple Mail Transfer Protocol (SMTP) defined in RFC 5321 is a client-server protocol. The SMTP specification distinguishes between several types of processes involved in the delivery of email messages. Email messages are composed on a Mail User Agent (MUA). The MUA is usually either an email client or a webmail interface. The MUA sends the email message to a Mail Submission Agent (MSA). The MSA processes the received email and forwards it to the Mail Transmission Agent (MTA). The MTA is responsible for the transmission of the email, directly or via intermediate MTAs, to the MTA of the destination domain. This destination MTA will then forward the message to the Mail Delivery Agent (MDA) where it will be accessed by the recipient’s MUA. SMTP is used for the interactions between MUA and MSA [12], MSA-MTA and MTA-MTA.

SMTP is a text-based protocol like many other application-layer protocols on the Internet. It relies on the byte-stream service. Servers listen on port 25. Clients send commands that are each composed of one line of ASCII text terminated by CR+LF. Servers reply by sending ASCII lines that contain a three-digit numerical error/success code and optional comments.

The SMTP protocol, like most text-based protocols, is specified as a BNF. The full BNF is defined in RFC 5321. The main SMTP commands are defined by the BNF rules shown in Fig. 31.

Fig. 31 BNF specification of the SMTP commands#

In this BNF, atext corresponds to printable ASCII characters. This BNF rule is defined in RFC 5322. The five main commands are EHLO [13], MAIL FROM:, RCPT TO:, DATA and QUIT. Postmaster is the alias of the system administrator who is responsible for a given domain or SMTP server. All domains must have a Postmaster alias. The SMTP responses are defined by the BNF shown in Fig. 32.

Fig. 32 BNF specification of the SMTP responses#

SMTP servers use structured reply codes containing three digits and an optional comment. The first digit of the reply code indicates whether the command was successful or not. A reply code of 2xy indicates that the command has been accepted. A reply code of 3xy indicates that the command has been accepted, but additional information from the client is expected. A reply code of 4xy indicates a transient negative reply. This means that for some reason, which is indicated by either the other digits or the comment, the command cannot be processed immediately, but there is some hope that the problem will only be transient. This is basically telling the client to try the same command again later. In contrast, a reply code of 5xy indicates a permanent failure or error. In this case, it is useless for the client to retry the same command later. Other application layer protocols such as FTP RFC 959 or HTTP RFC 2616 use a similar structure for their reply codes. Additional details about the other reply codes may be found in RFC 5321.

Examples of SMTP reply codes include the following :

220 <domain> Service ready

221 <domain> Service closing transmission channel

250 Requested mail action okay, completed

354 Start mail input; end with <CRLF>.<CRLF>

421 <domain> Service not available, closing transmission channel

450 Requested mail action not taken: mailbox unavailable

452 Requested action not taken: insufficient system storage

500 Syntax error, command unrecognized

501 Syntax error in parameters or arguments

502 Command not implemented

503 Bad sequence of commands

550 Requested action not taken: mailbox unavailable

Reply code 220 is used by the server as the first message when it agrees to interact with the client. Reply code 221 is sent by the server before closing the underlying transport connection. Reply code 250 is the standard positive reply that indicates the success of the previous command. Reply code 354 indicates that the client can start transmitting its email message. Reply code 421 is returned when there is a problem (e.g. lack of memory/disk resources) that prevents the server from accepting the transport connection. Reply codes 450 and 452 indicate that the destination mailbox is temporarily unavailable, for various reasons, while reply code 550 indicates that the mailbox does not exist or cannot be used for policy reasons. The 500 to 503 reply codes correspond to errors in the commands sent by the client. The 503 reply code would be sent by the server when the client sends commands in an incorrect order (e.g. the client tries to send an email before providing the destination address of the message).
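A client can decide how to react to a reply from its first digit alone. The sketch below shows one possible way to do this; the function name `classify_reply` and the category labels are chosen for this example, and only single-line replies are handled.

```python
CATEGORIES = {
    2: "accepted",
    3: "accepted, more input expected",
    4: "transient failure, retry later",
    5: "permanent failure, do not retry",
}

def classify_reply(line: str):
    """Split a reply line into (code, category, comment)."""
    code = int(line[:3])
    comment = line[3:].strip()
    return code, CATEGORIES.get(code // 100, "unknown"), comment

for reply in (
    "250 Requested mail action okay, completed",
    "354 Start mail input; end with <CRLF>.<CRLF>",
    "450 Requested mail action not taken: mailbox unavailable",
    "550 Requested action not taken: mailbox unavailable",
):
    print(classify_reply(reply))
```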

The transfer of an email message is performed in three phases. During the first phase, the client opens a transport connection with the server. Once the connection has been established, the client and the server exchange greeting messages (EHLO command). Most servers insist on receiving valid greeting messages and some of them drop the underlying transport connection if they do not receive a valid greeting. Once the greetings have been exchanged, the email transfer phase can start. During this phase, the client transfers one or more email messages by indicating the email address of the sender (MAIL FROM: command), the email address of the recipient (RCPT TO: command) followed by the headers and the body of the email message (DATA command). Once the client has finished sending all its queued email messages to the SMTP server, it terminates the SMTP association (QUIT command).

A successful transfer of an email message is shown below

S: 220 smtp.example.com ESMTP MTA information

C: EHLO mta.example.org

S: 250 Hello mta.example.org, glad to meet you

C: MAIL FROM:<alice@example.org>

S: 250 Ok

C: RCPT TO:<bob@example.com>

S: 250 Ok

C: DATA

S: 354 End data with <CR><LF>.<CR><LF>

C: From: "Alice Doe" <alice@example.org>

C: To: Bob Smith <bob@example.com>

C: Date: Tue, 9 Mar 2010 18:22:32 +0100

C: Subject: Hello

C:

C: Hello Bob

C: This is a small message containing 4 lines of text.

C: Best regards,

C: Alice

C: .

S: 250 Ok: queued as 12345

C: QUIT

S: 221 Bye

In the example above, the MTA running on mta.example.org opens a TCP connection to the SMTP server on host smtp.example.com. The lines prefixed with S: (resp. C:) are the responses sent by the server (resp. the commands sent by the client). The server sends its greetings as soon as the TCP connection has been established. The client then sends the EHLO command with its fully qualified domain name. The server replies with reply-code 250 and sends its greetings. The SMTP association can now be used to exchange an email.

To send an email, the client must first provide the address of the sender with MAIL FROM:. Then it uses RCPT TO: with the address of the recipient. Both the sender and the recipient are accepted by the server. The client can now issue the DATA command to start the transfer of the email message. After having received the 354 reply code, the client sends the headers and the body of its email message. The client indicates the end of the message by sending a line containing only the . (dot) character [14]. The server confirms that the email message has been queued for delivery or transmission with a reply code of 250. The client issues the QUIT command to close the session and the server confirms with reply-code 221, before closing the TCP connection.
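The order of the commands in the session shown above (EHLO, MAIL FROM:, RCPT TO:, DATA, the message, a single dot, QUIT) can be summarized by a small generator. This is a simplified sketch: the function name `smtp_client_lines` is hypothetical, the server replies are ignored, and a real client would wait for each reply before sending the next command. The doubling of a leading dot inside the message is the transparency rule of RFC 5321 (section 4.5.2), which prevents a body line from being mistaken for the end-of-message marker.

```python
def smtp_client_lines(client_name, sender, recipients, message_lines):
    """Yield, in order, the lines that a client sends for one message."""
    yield f"EHLO {client_name}"
    yield f"MAIL FROM:<{sender}>"
    for recipient in recipients:
        yield f"RCPT TO:<{recipient}>"
    yield "DATA"
    for line in message_lines:
        # A message line starting with a dot gets an extra leading dot.
        yield "." + line if line.startswith(".") else line
    yield "."  # a line containing only a dot ends the message
    yield "QUIT"

message = [
    'From: "Alice Doe" <alice@example.org>',
    "To: Bob Smith <bob@example.com>",
    "Subject: Hello",
    "",
    "Hello Bob",
    "Alice",
]
for line in smtp_client_lines(
    "mta.example.org", "alice@example.org", ["bob@example.com"], message
):
    print("C:", line)
```

For real use, Python's standard `smtplib` module implements the complete dialogue, including the processing of the reply codes.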

Note

Open SMTP relays and spam

Since its creation in 1971, email has been a very useful tool that is used by many users to exchange lots of information. In the early days, all SMTP servers were open and anyone could use them to forward emails towards their final destination. Unfortunately, over the years, some unscrupulous users have found ways to use email for marketing purposes or to send malware. The first documented abuse of email for marketing purposes occurred in 1978 when a marketer who worked for a computer vendor sent a marketing email to many ARPANET users. At that time, the ARPANET could only be used for research purposes and this was an abuse of the acceptable use policy. Unfortunately, given the extremely low cost of sending emails, the problem of unsolicited emails has not stopped. Unsolicited emails are now called spam and a study carried out by ENISA in 2009 reported that 95% of email was spam and that this share appeared to be still growing. This places a burden on the email infrastructure of Internet Service Providers and large companies that need to process many useless messages.

Given the amount of spam messages, SMTP servers are no longer open RFC 5068. Several extensions to SMTP have been developed in recent years to deal with this problem. For example, the SMTP authentication scheme defined in RFC 4954 can be used by an SMTP server to authenticate a client. Several techniques have also been proposed to allow SMTP servers to authenticate the messages sent by their users RFC 4870 RFC 4871.

The Post Office Protocol#

When the first versions of SMTP were designed, the Internet was composed of minicomputers that were used by an entire university department or research lab. These minicomputers were used by many users at the same time. Email was mainly used to send messages from a user on a given host to another user on a remote host. At that time, SMTP was the only protocol involved in the delivery of the emails as all hosts attached to the network were running an SMTP server. On such hosts, an email destined to local users was delivered by placing the email in a special directory or file owned by the user. However, the introduction of personal computers in the 1980s changed this environment. Initially, users of these personal computers used applications such as telnet to open a remote session on the local minicomputer to read their email. This was not user-friendly. A better solution appeared with the development of user-friendly email client applications on personal computers. Several protocols were designed to allow these client applications to retrieve the email messages destined to a user from his/her server. Two of these protocols became popular and are still used today. The Post Office Protocol (POP), defined in RFC 1939, is the simplest one. It allows a client to download all the messages destined to a given user from his/her email server. We describe POP briefly in this section. The second protocol is the Internet Message Access Protocol (IMAP), defined in RFC 3501. IMAP is more powerful, but also more complex than POP. IMAP was designed to allow client applications to efficiently access, in real time, messages stored in various folders on servers. IMAP assumes that all the messages of a given user are stored on a server and provides the functions that are necessary to search, download, delete or filter messages.

POP is another example of a simple line-based protocol. POP runs above the bytestream service. A POP server usually listens on port 110. A POP session is composed of three parts: an authorisation phase during which the server verifies the client's credentials, a transaction phase during which the client downloads messages, and an update phase that concludes the session. The client sends commands, and each server reply is prefixed by +OK to indicate a successful command or by -ERR to indicate an error.

When a client opens a transport connection with the POP server, the latter sends as banner an ASCII-line starting with +OK. The POP session is at that time in the authorisation phase. In this phase, the client can send its username (resp. password) with the USER (resp. PASS) command. The server replies with +OK if the username (resp. password) is valid and -ERR otherwise.

Once the username and password have been validated, the POP session enters the transaction phase. In this phase, the client can issue several commands. The STAT command is used to retrieve the status of the mailbox. Upon reception of this command, the server replies with a line that contains +OK followed by the number of messages in the mailbox and the total size of the mailbox in bytes. The RETR command, followed by a space and an integer n, is used to retrieve the nth message of the mailbox. The DELE command, with the same argument, is used to mark the nth message of the mailbox for deletion.

Once the client has retrieved and possibly deleted the emails contained in the mailbox, it must issue the QUIT command. This command terminates the POP session and allows the server to delete all the messages that have been marked for deletion by using the DELE command.
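The three phases described above can be sketched as a small state machine. The following Python fragment is only an illustrative in-memory model, not a real POP server: the class name `PopSession`, the `users` table and the wording of the error replies are assumptions made for this example. It shows that commands such as STAT are refused before authentication and that messages marked with DELE only disappear when QUIT triggers the update phase.

```python
class PopSession:
    """Toy in-memory model of the three POP phases (hypothetical, not a real server)."""

    def __init__(self, users, mailbox):
        self.users = users            # assumed {username: password} table
        self.mailbox = list(mailbox)  # message bodies as bytes
        self.deleted = set()          # 1-based numbers marked by DELE
        self.phase = "AUTHORIZATION"
        self.user = None

    def _kept(self):
        # Messages that are not marked for deletion.
        return [m for n, m in enumerate(self.mailbox, 1) if n not in self.deleted]

    def handle(self, line):
        """Process one command line and return the first line of the reply."""
        verb, _, arg = line.strip().partition(" ")
        verb = verb.upper()
        if self.phase == "AUTHORIZATION":
            if verb == "USER":
                if arg in self.users:
                    self.user = arg
                    return "+OK"
                return "-ERR unknown user"
            if verb == "PASS" and self.user is not None:
                if self.users[self.user] == arg:
                    self.phase = "TRANSACTION"
                    return "+OK"
                self.user = None
                return "-ERR invalid password"
            if verb == "QUIT":
                self.phase = "CLOSED"
                return "+OK"
            return "-ERR command not valid in this phase"
        if self.phase == "TRANSACTION":
            if verb == "STAT":
                kept = self._kept()
                return f"+OK {len(kept)} {sum(len(m) for m in kept)}"
            if verb in ("RETR", "DELE"):
                if not arg.isdecimal():
                    return "-ERR no such message"
                n = int(arg)
                if not 1 <= n <= len(self.mailbox) or n in self.deleted:
                    return "-ERR no such message"
                if verb == "RETR":
                    return f"+OK {len(self.mailbox[n - 1])} octets"
                self.deleted.add(n)
                return f"+OK message {n} deleted"
            if verb == "QUIT":
                # Update phase: only now are the marked messages removed.
                self.mailbox = self._kept()
                self.deleted.clear()
                self.phase = "CLOSED"
                return "+OK POP3 server signing off"
        return "-ERR unknown command"
```

A session would be driven by calling `handle()` once per command line, for example `PopSession({"alice": "12345pass"}, [b"..."]).handle("USER alice")`. A real server would also send the message body after the RETR status line and would protect the mailbox against concurrent access, which this sketch ignores.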

The figure below provides a simple POP session. All lines prefixed with C: (resp. S:) are sent by the client (resp. server).

S: +OK POP3 server ready

C: USER alice

S: +OK

C: PASS 12345pass

S: +OK alice's maildrop has 2 messages (620 octets)

C: STAT

S: +OK 2 620

C: LIST

S: +OK 2 messages (620 octets)

S: 1 120

S: 2 500

S: .

C: RETR 1

S: +OK 120 octets

S: <the POP3 server sends message 1>

S: .

C: DELE 1

S: +OK message 1 deleted

C: QUIT

S: +OK POP3 server signing off (1 message left)

In this example, a POP client contacts a POP server on behalf of the user named alice. Note that in this example, Alice’s password is sent in the clear by the client. This implies that anyone who is able to capture the packets sent by Alice will learn Alice’s password [15]. Then Alice’s client issues the STAT command to learn the number of messages that are stored in her mailbox, and the LIST command to obtain the size of each message. It then retrieves and deletes the first message of the mailbox.
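From the client's point of view, handling such a session mostly consists of parsing the server's reply lines. The sketch below shows one possible way to do this in Python. The function names are hypothetical and the lines are assumed to have already been read from the connection and stripped of their end-of-line characters. The removal of a doubled leading dot in multi-line replies follows the byte-stuffing rule of RFC 1939, which is not discussed in the text above.

```python
def parse_status(line):
    """Split a POP reply line into (ok, text)."""
    if line.startswith("+OK"):
        return True, line[3:].strip()
    if line.startswith("-ERR"):
        return False, line[4:].strip()
    raise ValueError(f"not a POP status line: {line!r}")


def parse_stat(line):
    """Return (message_count, mailbox_size) from a STAT reply such as '+OK 2 620'."""
    ok, text = parse_status(line)
    if not ok:
        raise ValueError(text)
    count, size = text.split()[:2]
    return int(count), int(size)


def read_multiline(lines):
    """Collect the lines of a multi-line reply up to the terminating '.' line."""
    body = []
    for line in lines:
        if line == ".":
            return body
        # RFC 1939 byte-stuffing: the sender prepends '.' to lines starting with '.'.
        body.append(line[1:] if line.startswith(".") else line)
    raise ValueError("missing terminating '.' line")


def parse_list(lines):
    """Turn the body of a LIST reply into a {message_number: size} dictionary."""
    sizes = {}
    for entry in read_multiline(lines):
        number, size = entry.split()[:2]
        sizes[int(number)] = int(size)
    return sizes
```

Applied to the session above, `parse_stat("+OK 2 620")` yields the pair `(2, 620)` and `parse_list(["1 120", "2 500", "."])` maps message 1 to 120 bytes and message 2 to 500 bytes.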

The world wide web#

In the early days, the Internet was mainly used for remote terminal access with telnet, email and file transfer. The default file transfer protocol, FTP, defined in RFC 959 was widely used. FTP clients and servers are still included in some operating systems.

Many FTP clients offered a user interface similar to a Unix shell and allowed clients to browse the file system on the server and to send and retrieve files. FTP servers can be configured in two modes:

authenticated: in this mode, the FTP server only accepts users with a valid user name and password. Once authenticated, they can access the files and directories according to their permissions.

anonymous: in this mode, clients supply the anonymous user identifier and their email address as password. These clients are granted access to a special zone of the file system that only contains public files.

FTP was very popular in the 1990s and early 2000s, but today it has mostly been superseded by more recent protocols. Authenticated access to files is mainly done by using the Secure Shell (ssh) protocol defined in RFC 4251 and supported by clients such as scp or sftp. Nowadays, anonymous access is mainly provided by web protocols.

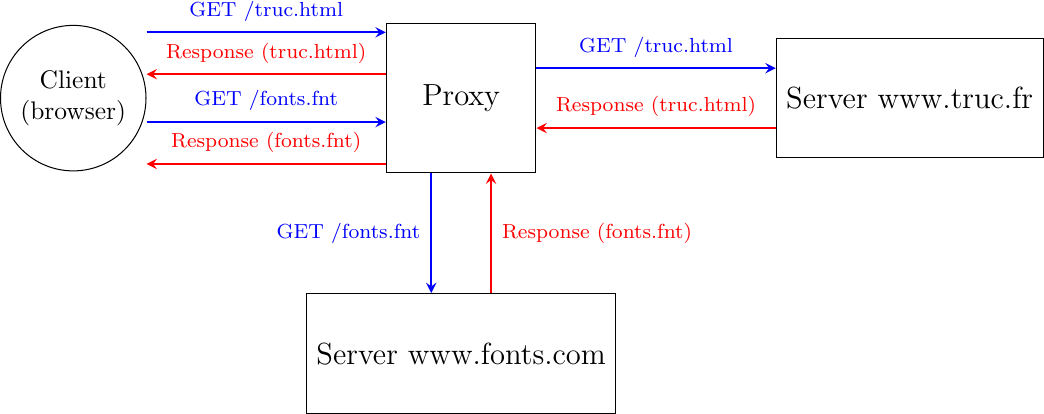

In the late 1980s, high energy physicists working at CERN had to efficiently exchange documents about their ongoing and planned experiments. Tim Berners-Lee evaluated several of the document sharing techniques that were available at that time [B1989]. As none of the existing solutions met CERN’s requirements, he chose to develop a completely new document sharing system. This system was initially called the mesh. It was quickly renamed the world wide web. The starting point for the world wide web is the hypertext document. A hypertext document is a document that contains references (hyperlinks) to other documents that the reader can immediately access. Hypertext was not invented for the world wide web. The idea of hypertext documents was proposed in 1945 [Bush1945] and the first experiments were done during the 1960s [Nelson1965] [Myers1998]. Compared to the hypertext documents that were used in the late 1980s, the main innovation introduced by the world wide web was to allow hyperlinks to reference documents stored on different remote machines. This is illustrated in Fig. 33.

Fig. 33 World-wide web clients and servers

A document sharing system such as the world wide web is composed of three important parts.

A standardized addressing scheme that unambiguously identifies documents

A standard document format : the HyperText Markup Language

A standardized protocol to efficiently retrieve the documents stored on a server

Note

Open standards and open implementations

Open standards play a key role in the success of the world wide web as we know it today. Without open standards, the world wide web would never have reached its current size. In addition to open standards, another important factor in the success of the web was the availability of open and efficient implementations of these standards. When CERN started to work on the web, their objective was to build a running system that could be used by physicists. They developed open-source implementations of the first web servers and web clients. These open-source implementations were powerful and could be used as is by institutions willing to share information. They were also extended by other developers who contributed new features. For example, the NCSA added support for images in their Mosaic browser that was eventually used to create Netscape Communications and the first commercial browsers and servers.

The first component of the world wide web is the Uniform Resource Identifier (URI), defined in RFC 3986. A URI is a character string that unambiguously identifies a resource on the world wide web. Here is a subset of the BNF for URIs:

URI = scheme ":" "//" authority path [ "?" query ] [ "#" fragment ]

scheme = ALPHA *( ALPHA / DIGIT / "+" / "-" / "." )

authority = [ userinfo "@" ] host [ ":" port ]

query = *( pchar / "/" / "?" )

fragment = *( pchar / "/" / "?" )

pchar = unreserved / pct-encoded / sub-delims / ":" / "@"

pct-encoded = "%" HEXDIG HEXDIG

unreserved = ALPHA / DIGIT / "-" / "." / "_" / "~"

reserved = gen-delims / sub-delims

gen-delims = ":" / "/" / "?" / "#" / "[" / "]" / "@"

sub-delims = "!" / "$" / "&" / "'" / "(" / ")" / "*" / "+" / "," / ";" / "="

The first component of a URI is its scheme. A scheme can be seen as a selector, indicating the meaning of the fields after it. In practice, the scheme often identifies the application-layer protocol that must be used by the client to retrieve the document, but it is not always the case. Some schemes do not imply a protocol at all and some do not indicate a retrievable document [16]. The most frequent schemes are http and https. We focus on http in this section. A URI scheme can be defined for almost any application layer protocol [17]. The characters : and // follow the scheme of any URI.

The second part of the URI is the authority. With retrievable URIs, this includes the DNS name or the IP address of the server where the document can be retrieved using the protocol specified via the scheme. This name can be preceded by some information about the user (e.g. a user name) who is requesting the information. Earlier definitions of the URI allowed the specification of a user name and a password before the @ character (RFC 1738), but this is now deprecated as placing a password inside a URI is insecure. The host name can be followed by the colon character and a port number. A default port number is defined for some protocols and the port number should only be included in the URI if a non-default port number is used (for other protocols, techniques like service DNS records can be used).

The third part of the URI is the path to the document. This path is structured as filenames on a Unix host (but it does not imply that the files are indeed stored this way on the server). If the path is not specified, the server will return a default document. The last two optional parts of the URI are used to provide a query parameter and indicate a specific part (e.g. a section in an article) of the requested document. Sample URIs are shown below.

http://tools.ietf.org/html/rfc3986.html

mailto:infobot@example.com?subject=current-issue

http://docs.python.org/library/basehttpserver.html?highlight=http#BaseHTTPServer.BaseHTTPRequestHandler

telnet://[2001:db8:3080:3::2]:2323/

ftp://cnn.example.com&story=breaking_news@10.0.0.1/top_story.htm

The first URI corresponds to a document named rfc3986.html that is stored on the server named tools.ietf.org and can be accessed by using the http protocol on its default port. The second URI corresponds to an email message, with subject current-issue, that will be sent to user infobot in domain example.com. The mailto: URI scheme is defined in RFC 2368. The third URI references the portion BaseHTTPServer.BaseHTTPRequestHandler of the document basehttpserver.html that is stored in the library directory on the docs.python.org server. This document can be retrieved by using the http protocol. The query parameter highlight=http is associated with this URI. The fourth example is a server that operates the telnet protocol, uses IPv6 address 2001:db8:3080:3::2 and is reachable on port 2323. The last URI is somewhat special. Most users will assume that it corresponds to a document stored on the cnn.example.com server. However, to parse this URI, it is important to remember that the @ character is used to separate the user name from the host name in the authority part of a URI. This implies that the URI points to a document named top_story.htm on the host having IPv4 address 10.0.0.1. The document will be retrieved by using the ftp protocol with the user name set to cnn.example.com&story=breaking_news.
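The decomposition of these sample URIs can be checked with a standard URI parser. The short Python sketch below uses `urlsplit` from the standard `urllib.parse` module. The helper name `describe` and the dictionary layout are choices made for this illustration only.

```python
from urllib.parse import urlsplit


def describe(uri):
    """Return the main components of a URI as a dictionary."""
    parts = urlsplit(uri)
    return {
        "scheme": parts.scheme,
        "user": parts.username,    # text before '@' in the authority, if any
        "host": parts.hostname,    # DNS name or IP address, without brackets
        "port": parts.port,        # None when the default port is implied
        "path": parts.path,
        "query": parts.query,
        "fragment": parts.fragment,
    }


if __name__ == "__main__":
    samples = [
        "http://tools.ietf.org/html/rfc3986.html",
        "mailto:infobot@example.com?subject=current-issue",
        "telnet://[2001:db8:3080:3::2]:2323/",
        "ftp://cnn.example.com&story=breaking_news@10.0.0.1/top_story.htm",
    ]
    for uri in samples:
        print(uri)
        for name, value in describe(uri).items():
            print(f"  {name:8} = {value!r}")
```

For the last URI, the parser reports 10.0.0.1 as the host and cnn.example.com&story=breaking_news as the user name, which matches the manual analysis above.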

The second component of the world wide web is the HyperText Markup Language (HTML). HTML defines the format of the documents that are exchanged on the web. The first version of HTML was derived from the Standard Generalized Markup Language (SGML) that was standardized in 1986 by ISO. SGML was designed to support large documents maintained by governments, law firms or aerospace companies that must be shared efficiently in a machine-readable manner. These industries require documents to remain readable and editable for tens of years and insisted on a standardized format supported by multiple vendors. Today, SGML is no longer widely used beyond specific applications, but its descendants including HTML and XML are now widespread.

A markup language is a structured way of adding annotations about the formatting of the document within the document itself. Example markup languages include troff, which is used to write the Unix man pages, and LaTeX. HTML uses markers to annotate text and a document is composed of HTML elements. Each element is usually composed of three parts: a start tag that potentially includes some specific attributes, some text (often including other elements), and an end tag. An HTML tag is a keyword enclosed in angle brackets. The generic form of an HTML element is

<tag>Some text to be displayed</tag>

More complex HTML elements can also include optional attributes in the start tag

<tag attribute1="value1" attribute2="value2">some text to be displayed</tag>
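To make the three parts of an element concrete, the following Python sketch feeds such an element to the `HTMLParser` class of the standard `html.parser` module and records the start tag with its attributes, the text, and the end tag. The class name `ElementLogger` and the helper `parse_elements` are hypothetical names chosen for this illustration.

```python
from html.parser import HTMLParser


class ElementLogger(HTMLParser):
    """Record start tags (with attributes), text and end tags in document order."""

    def __init__(self):
        super().__init__()
        self.events = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs taken from the start tag.
        self.events.append(("start", tag, dict(attrs)))

    def handle_data(self, data):
        self.events.append(("text", data))

    def handle_endtag(self, tag):
        self.events.append(("end", tag))


def parse_elements(html):
    """Return the list of events found in an HTML fragment."""
    parser = ElementLogger()
    parser.feed(html)
    parser.close()
    return parser.events


if __name__ == "__main__":
    sample = '<tag attribute1="value1" attribute2="value2">some text to be displayed</tag>'
    for event in parse_elements(sample):
        print(event)
```

Nested elements simply produce additional start and end events between those of the enclosing element, which is how a browser can rebuild the tree structure of a document.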